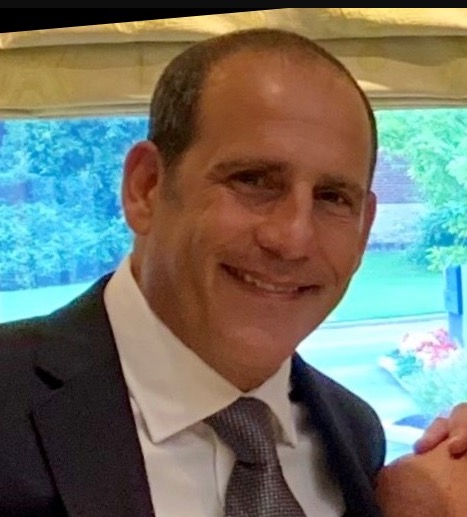

‘Humiliated’ NY lawyer who used ChatGPT for ‘bogus’ court doc profusely apologizes

A New York attorney who used ChatGPT to write a legal brief — citing bogus cases — profusely apologized in court Thursday, becoming emotional as he explained being “duped” by the artificial intelligence chatbot.

Steven Schwartz, of Tribeca law firm Levidow, Levidow & Oberman, told a Manhattan federal judge that he was “humiliated” by the flub, saying he believed he was using a “search engine” and never fathomed the AI app would provide fake case law.

“I would like to sincerely apologize to your honor, to this court, to the defendants, to my firm,” Schwartz said during the packed court hearing.

“I deeply regret my actions in this manner that led to this hearing today,” Schwartz said, voice shaking. “I suffered both professionally and personally [because of] the widespread publicity this issue has generated. I am both embarrassed, humiliated and extremely remorseful.”

Schwartz used ChatGPT to help him find case law to bolster his client’s lawsuit, but the bot completely fabricated the cases, unbeknownst to the attorney.

He ultimately filed the legal brief citing the fictitious cases, prompting Judge Kevin Castel to haul him into court for a hearing on whether to sanction the firm over the snafu.

“I have never been involved in anything like this in my 30 years,” said Schwartz, who was forced to pause and compose himself as he made the apology.

“I can assure this court that nothing like this will happen again,” he insisted to the judge.

Schwartz had filed the court brief in his firm’s 2022 case representing Robert Mata, who sued Colombian airline Avianca claiming he was injured when a metal serving cart hit his knee on a flight to New York City.

The fictitious cases that Schwartz cited included Miller v. United Airlines, Petersen v. Iran Air and Varghese v. China Southern Airlines.

Mata’s suit is still pending.

Castel grilled Schwartz for roughly two hours Thursday on how he could have allowed such a mishap to happen.

Schwartz repeatedly insisted he never imagined ChatGPT would completely fabricate case law.

“I just never could imagine that ChatGPT would fabricate cases,” Schwartz said. “My assumption was I was using a search engine that was using sources I don’t have access to.”

Schwartz said that ChatGPT didn’t specify the cases weren’t real, adding “I continued to be duped by ChatGPT.”

“It just never occurred to me it would be making up cases,” he explained. “I just assumed it couldn’t access the full case.”

Schwartz said he never would have submitted the brief if he thought the case law wasn’t real, but admitted he should have done further due diligence first.

“In hindsight, God I wish I did that, but I didn’t do that,” he said.

Schwartz also admitted that he couldn’t find the full cases on the internet, but said he thought they could have been on appeal or unpublished cases.

At one point Castel read an excerpt from one of the made-up cases and pressed Schwartz: “Can we agree that’s legal gibberish?”

“Looking at it now, yes,” Schwartz admitted.

Schwartz swore off using the app in the future and also said he’d taken an AI training course to improve his knowledge of technology.

Thomas Corbino, another lawyer at the firm, told the judge the firm had never been sanctioned before and that Schwartz had always been a stand-up lawyer.

Another lawyer for the firm, Ronald Minkoff, told the judge lawyers are notoriously bad with technology.

“There was no intentional misconduct here,” Minkoff said. “This was the result of ignorance and carelessness. It was not intentional and certainly not in bad faith.”

The judge said he would rule on whether to issue sanctions at a later date.