100,000 new SUVs booked in 30 minutes: How Mahindra built its online order system

Mandar Samant

Specialist CE Manager, Google Cloud

Bhuwan Lodha

Senior VP, Chief Digital Officer - Automotive, Mahindra Group

Almost 70 years ago, in 1954, the Mahindra Group began assembling the Indian version of the Willys CJ3. The Willys was arguably the first SUV in any country, and that vehicle laid the groundwork for our Automotive Division’s continued leadership in the space, up to our iconic and best-selling Scorpio models.

When it came time to launch the newest version, the Scorpio-N, this summer, we knew we wanted to attempt something as special and different as the vehicle itself. As in most markets, vehicle sales in India are largely made through dealerships. Yet past launches have shown us that our tech-savvy buyers are enthusiastic about a different kind of sales experience, not unlike the one they have come to expect from so many other products.

As a result, we set out to build a first-of-its-kind site for digital bookings. We knew it would face a serious surge, like most e-commerce sites on a big sales day, but that was the kind of traffic automotive sites are hardly accustomed to.

To our delight, the project exceeded our wildest expectations, setting digital sales records in the process. On launch day, July 30, 2022, we saw more than 25,000 booking requests in the first minute, and 100,000 booking requests in the first 30 minutes, totaling USD 2.3 billion in car sales.

At its peak, there were around 60,000 concurrent users on the booking platform trying to book the vehicle. Now let’s look under the hood of how to create a platform robust and scalable enough to handle an e-commerce-level rush for the automotive industry.

A cloud-first approach to auto sales and architecture

Our aim was to build a clean, lean, and highly efficient system that was also fast, robust, and scalable. To achieve it, we went back to the drawing board to remove the inefficiencies in our existing online and dealer booking processes. We put on our design thinking hats to give our customers and dealers a platform built for high-rush launches, one that did the only thing that mattered on launch day: let everyone book a vehicle swiftly and efficiently.

While order booking use cases are quite common development scenarios, our particular challenge was to handle a large volume of orders in a very short time, and ensure almost immediate end-user response times. Each order required a sequence of business logic checks, customer notifications, payment flow, and interaction with our CRM systems. We knew we needed to build a cloud-first solution that could scale to meet the surge and then rapidly scale down once the rush was over.

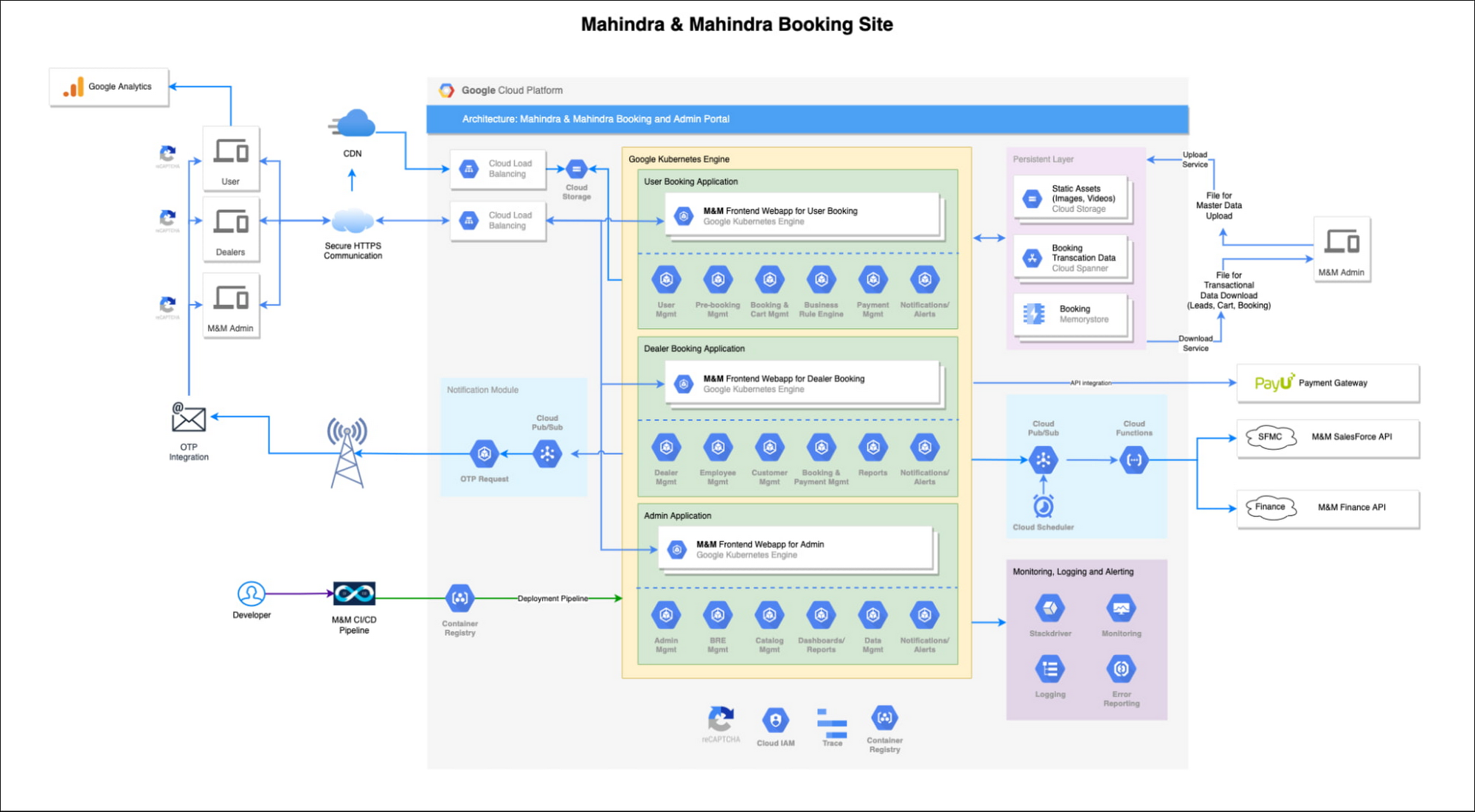

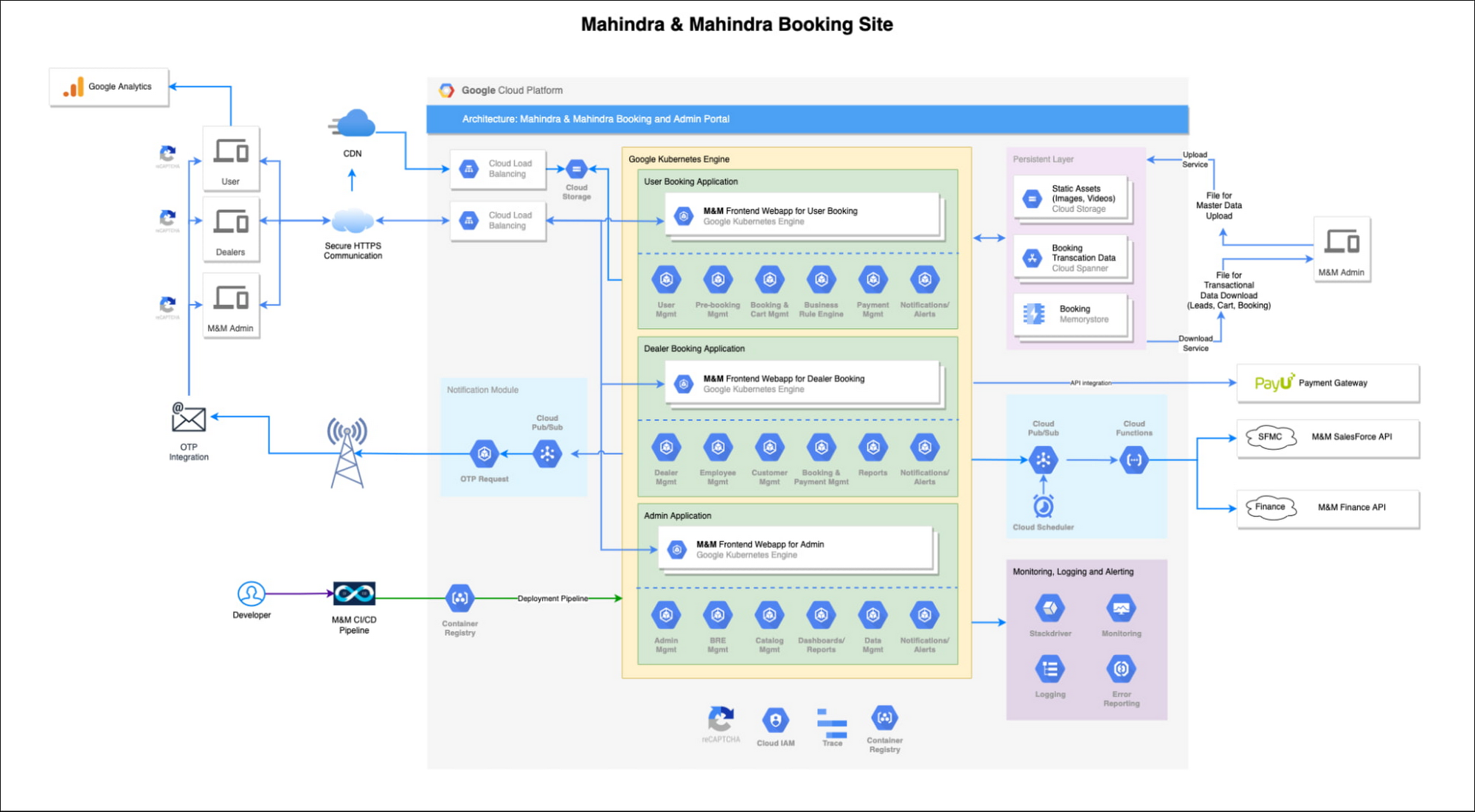

We arrived at a list of required resources about three months before the launch date and planned for resources to be reserved for our Google Cloud project. We chose to build the solution on managed platform services, which allowed us to focus on developing our solution logic rather than worrying about day-two concerns such as platform scalability and security. The core platform stack comprises Google Kubernetes Engine (GKE), Cloud Spanner, and Cloud Memorystore (Redis), and is supported by Container Registry, Pub/Sub, Cloud Functions, reCAPTCHA Enterprise, Google Analytics, and Google Cloud’s operations suite. The solution architecture is described in detail in the following section.

Architecture components

The architecture diagram above depicts the high-level solution architecture. We had three key personas interacting with our portal: customers, dealers, and our admin team. To identify the microservices, we dissected the use cases for each of these personas and designed parameterized microservices to serve them. As solution design progressed, our microservices-based approach allowed us to quickly adapt business logic and keep up with changes suggested by our sales teams. The front-end web application was created using ReactJS as a single-page application, while the microservices were built using NodeJS and hosted on Google Kubernetes Engine (GKE).

Container orchestration with Google Kubernetes Engine

GKE provides Standard and Autopilot as two modes of operation. In addition to the features provided by the Standard mode, GKE Autopilot mode adds day-two conveniences such as Google Cloud managed nodes. We opted for GKE Standard mode, with nodes provisioned across three Google Cloud availability zones in the Mumbai Google Cloud region, as we were aware of the precise load pattern to be anticipated, and the portal was going to be relatively short-lived. OSS Istio was configured to route the traffic within the cluster, which was sitting behind a cloud load balancer, itself behind the CDN. All microservices code components were built, packaged into containers, and deployed via our corporate build platform. At peak, we had 1,200 GKE nodes in operation.

All customer notifications generated in the user flow were delivered via email and SMS/text messages. These were routed via Pub/Sub, acting as a queue, with Cloud Functions draining the queues and delivering them via partner SMS gateways and Mahindra’s email APIs. Given the importance of sending timely SMS notifications of booking confirmations, two independent third-party SMS gateway providers were used to ensure redundancy and scale. Both Pub/Sub and Cloud Functions scaled automatically to keep up with notification workload.
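The notification flow can be sketched in a few lines. This is a minimal illustration only, not the production code: an in-memory `queue.Queue` stands in for the Pub/Sub queue, the `drain` function stands in for a Cloud Function subscriber, and the two gateway functions are hypothetical stubs. It also assumes the two SMS providers were used in a simple try-primary-then-secondary order, which the text does not specify.

```python
import queue
from typing import Callable, List

# Hypothetical gateway signature: takes (phone, text) and raises on failure.
SmsGateway = Callable[[str, str], None]


class GatewayError(Exception):
    """Raised by a gateway stub when delivery fails."""


def send_with_failover(gateways: List[SmsGateway], phone: str, text: str) -> int:
    """Try each gateway in order; return the index of the one that succeeded."""
    last_error = None
    for index, gateway in enumerate(gateways):
        try:
            gateway(phone, text)
            return index
        except GatewayError as err:
            last_error = err  # fall through to the next provider
    raise GatewayError(f"all gateways failed: {last_error}")


def drain(notifications: "queue.Queue[dict]", gateways: List[SmsGateway]) -> List[int]:
    """Deliver every queued notification and record which gateway handled it."""
    used = []
    while True:
        try:
            message = notifications.get_nowait()
        except queue.Empty:
            return used
        used.append(send_with_failover(gateways, message["phone"], message["text"]))


# --- Stubs for illustration only (assumptions, not real provider APIs) ---
sent_log: List[tuple] = []


def primary_gateway(phone: str, text: str) -> None:
    raise GatewayError("primary provider unavailable")  # simulate an outage


def secondary_gateway(phone: str, text: str) -> None:
    sent_log.append((phone, text))


pending: "queue.Queue[dict]" = queue.Queue()
pending.put({"phone": "+910000000000", "text": "Your booking is confirmed."})
used_gateways = drain(pending, [primary_gateway, secondary_gateway])
```

Decoupling the booking request from delivery in this way means a slow or failing SMS provider delays only the notification, not the booking itself.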

Data strategy

Given the need for incredibly high transaction throughput for a short burst of time, we chose Spanner as the primary datastore as it offers the best features of relational databases and scale-out performance of NoSQL databases. Using Spanner not only provided us the scale needed to store the car bookings rapidly, but also allowed the admin teams to see real-time drill-down pivots of sales performance across vehicle models, towns and dealerships, without the need for an additional analytical processing layer. Here’s how:

- Spanner uniquely offers Interleaved tables that physically collocate the child table rows with the parent table row, leading to faster retrieval.

- Spanner also has a scale-out model where it automatically and dynamically partitions data into splits and distributes them across compute nodes to scale out the transaction workload.

- We were able to prevent Spanner from dynamically partitioning data during peak traffic, by pre-warming the Spanner database with representative data, and allowing it to settle before the booking window opened.

Together, these benefits ensured a quick and seamless booking and checkout process for our customers.
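To see why interleaving speeds up retrieval, it helps to picture rows stored in primary-key order, with each child key prefixed by its parent key. The sketch below is a toy in-memory model of that idea, not Spanner itself; the dealer/booking table pairing and the key values are hypothetical, since the schema is not described here. In Spanner the relationship would be declared with the `INTERLEAVE IN PARENT` clause in the child table's DDL.

```python
import bisect
from typing import Dict, List, Tuple


class InterleavedStore:
    """Toy key-ordered store: child keys extend the parent key as a prefix."""

    def __init__(self) -> None:
        self._keys: List[tuple] = []          # kept sorted
        self._rows: Dict[tuple, dict] = {}    # key -> row data

    def put(self, key: tuple, row: dict) -> None:
        if key not in self._rows:
            bisect.insort(self._keys, key)
        self._rows[key] = row

    def parent_with_children(self, parent_key: tuple) -> List[Tuple[tuple, dict]]:
        """Return the parent row and its children with one contiguous scan."""
        start = bisect.bisect_left(self._keys, parent_key)
        result = []
        for key in self._keys[start:]:
            if key[: len(parent_key)] != parent_key:
                break  # left the parent's key range
            result.append((key, self._rows[key]))
        return result


store = InterleavedStore()
# Hypothetical keys: parent = (dealer_id,), child = (dealer_id, booking_id).
store.put(("D2",), {"name": "Dealer Two"})
store.put(("D1", "B2"), {"model": "Z8"})
store.put(("D1",), {"name": "Dealer One"})
store.put(("D2", "B7"), {"model": "Z4"})
store.put(("D1", "B1"), {"model": "Z6"})

d1_rows = store.parent_with_children(("D1",))
d1_keys = [key for key, _ in d1_rows]
```

Regardless of insertion order, the rows for dealer `D1` end up adjacent, so reading a parent together with its children touches one key range rather than two separate tables.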

We chose Memorystore (Redis) to serve mostly static, master data such as models, cities/towns, and dealer lists. It also served as the primary store for user session/token tracking. Separate Memorystore clusters were provisioned for each of the above needs.
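One common way to serve mostly static master data from a cache is the cache-aside pattern with a time-to-live. The sketch below assumes that pattern, which may differ from how the cache was actually populated. `TtlCache` is an in-memory stand-in for a Redis-style store, and `load_models`, the key name, and the TTL value are hypothetical.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple


class TtlCache:
    """In-memory stand-in for a Redis-style cache with per-key expiry."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        self._entries: Dict[str, Tuple[float, Any]] = {}

    def get(self, key: str) -> Optional[Any]:
        entry = self._entries.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            del self._entries[key]  # expired: behave like a miss
            return None
        return value

    def set(self, key: str, value: Any, ttl_seconds: float) -> None:
        self._entries[key] = (self._clock() + ttl_seconds, value)


def get_master_data(cache: TtlCache, key: str,
                    load_from_database: Callable[[str], Any],
                    ttl_seconds: float = 300.0) -> Any:
    """Cache-aside read: serve from cache, fall back to the database on a miss."""
    cached = cache.get(key)
    if cached is not None:
        return cached
    value = load_from_database(key)
    cache.set(key, value, ttl_seconds)
    return value


database_reads = []


def load_models(key: str) -> list:
    """Hypothetical loader; records each call so cache hits are visible."""
    database_reads.append(key)
    return ["Model A", "Model B"]  # placeholder values


cache = TtlCache()
first = get_master_data(cache, "models", load_models)
second = get_master_data(cache, "models", load_models)  # served from cache
```

Here the second read never reaches the loader, which is the effect that matters during a surge: repeated lookups of model, city, and dealer lists stay off the primary database.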

UI/UX Strategy

We kept the website in line with a lean booking process. We only had the necessary components that a customer would need to book the vehicle: 1) vehicle choice, 2) the dealership to deliver the vehicle to, 3) the customer’s personal details, and 4) payment mode.

Within the journey, we worked towards a lean system and ensured all images and other master assets were optimized for size and pushed to Cloudflare CDN, with cache enabled to reduce latency and to reduce server calls. All the static and resource files were pushed to CDN during the build process.

On the service backend side, we had around 10 microservices that were independent of each other. Each microservice was scaled proportionally to the request frequency and the data it was processing. The source code was reviewed and optimized to have fewer iterations. We made sure there were no bottlenecks in any of the microservices and had mechanisms in place to recover in case there were some failures.
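Scaling a service in proportion to its load can be expressed with the rule documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric), clamped to configured minimum and maximum pod counts. The sketch below implements only that core formula and omits the autoscaler's tolerance and stabilization behavior. The text does not say which scaling mechanism was used, so treat this as an assumption, and the numbers in the example calls as made-up inputs.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int,
                     max_replicas: int) -> int:
    """Proportional scaling rule, clamped to the [min, max] replica range."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * (current_metric / target_metric))
    return max(min_replicas, min(max_replicas, raw))


# Hypothetical inputs: requests per second per pod versus a per-pod target.
scale_up = desired_replicas(10, current_metric=900, target_metric=300,
                            min_replicas=3, max_replicas=50)
scale_down = desired_replicas(4, current_metric=50, target_metric=100,
                              min_replicas=3, max_replicas=50)
capped = desired_replicas(10, current_metric=1000, target_metric=100,
                          min_replicas=3, max_replicas=40)
```

The clamp is what min/max pod settings control: the minimum keeps enough warm capacity for the first seconds of a launch, and the maximum bounds cost and downstream load.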

Monitoring the solution

Monitoring the solution was going to be a key necessity. We anticipated that customer volume would spike when the web portal launched on a specific date and time, so the solution team required real-time operational visibility into how each component was performing. To monitor the performance of all Google Cloud services, specific Google Cloud Monitoring dashboards were developed. Custom dashboards were also developed to analyze application logs via Cloud Trace and Cloud Logging. This allowed the operations team to monitor some business metrics correlated with operations status in real time. The war room team kept track of end users' experiences by manually navigating through the main booking flow and logging in to the portal.

Finally, integration with Google Analytics gave our team almost real-time visibility into user traffic in terms of use cases, with the ability to drill down to get city/state-wise details.

Preparing for the portal launch

The team did extensive performance testing ahead of the launch. The critical target was end-user response times in the low single-digit seconds for all customer requests. Given that the architecture exclusively used REST APIs and sync calls wherever possible for client-server communication, the team had to test the REST APIs to arrive at the best GKE and Spanner sizing to meet the peak performance test target of 250,000 concurrent users. Locust, an open-source performance testing tool running on an independent GKE cluster, was used to perform and monitor the stress test. Numerous configurations (e.g. min/max pod settings in GKE, Spanner indexes and interleaved storage settings, introducing Memorystore for real-time counters, etc.) were tuned during the process. This load testing established that GKE and Spanner could handle the traffic spike we were expecting by a significant margin.
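The shape of such a test is simple: run many simulated users concurrently, record each request's latency, and compare a high percentile against the response-time target. The sketch below uses only the Python standard library as a small stand-in for a real tool like Locust. `fake_booking_request` is a hypothetical placeholder for an HTTP call to a booking API, and the user counts and the 5-second threshold are illustrative assumptions, not the values used in the actual tests.

```python
import math
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def percentile(samples: List[float], pct: int) -> float:
    """Nearest-rank percentile of a non-empty list of samples."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def timed_call(request_fn: Callable[[], None]) -> float:
    """Return the wall-clock duration of one request in seconds."""
    start = time.perf_counter()
    request_fn()
    return time.perf_counter() - start


def run_load(request_fn: Callable[[], None], concurrent_users: int,
             requests_per_user: int) -> List[float]:
    """Run one thread per simulated user and collect every request latency."""
    def user_session(_user_id: int) -> List[float]:
        return [timed_call(request_fn) for _ in range(requests_per_user)]

    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        sessions = list(pool.map(user_session, range(concurrent_users)))
    return [latency for session in sessions for latency in session]


def fake_booking_request() -> None:
    """Hypothetical stand-in for a REST call to the booking service."""
    time.sleep(0.01)


TARGET_SECONDS = 5.0  # illustrative threshold, not the real test target
latencies = run_load(fake_booking_request, concurrent_users=10, requests_per_user=5)
p95 = percentile(latencies, 95)
meets_target = p95 < TARGET_SECONDS
```

Judging the run by a high percentile rather than the average matters for a launch like this, because the slowest few percent of requests are the ones customers notice when a booking window opens.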

Transforming the SUV buying experience

In India, the traditional SUV purchasing process is offline and centered around dealerships. Pivoting to an exclusive online booking experience needed internal business process tweaks to make it simple and secure for customers and dealers to do online bookings themselves. With our deep technical partnership with Google Cloud in powering the successful Scorpio-N launch event, we feel we have influenced a shift in the SUV buying experience, where we received more than 70% of the first 25,000 booking requests directly from buyers sitting in their homes.

The Mahindra Automotive team looks forward to continuing to drive digital innovations in the Indian automotive sector with Google Cloud.