Advanced Technical

Nov 22, 2024

Hymba Hybrid-Head Architecture Boosts Small Language Model Performance

Transformers, with their attention-based architecture, have become the dominant choice for language models (LMs) due to their strong performance,...

12 MIN READ

Nov 13, 2024

Mastering LLM Techniques: Data Preprocessing

The advent of large language models (LLMs) marks a significant shift in how industries leverage AI to enhance operations and services. By automating routine...

14 MIN READ

Oct 22, 2024

Scaling LLMs with NVIDIA Triton and NVIDIA TensorRT-LLM Using Kubernetes

Large language models (LLMs) have been widely used for chatbots, content generation, summarization, classification, translation, and more. State-of-the-art LLMs...

16 MIN READ

Sep 24, 2024

Accelerating Leaderboard-Topping ASR Models 10x with NVIDIA NeMo

NVIDIA NeMo has consistently developed automatic speech recognition (ASR) models that set the benchmark in the industry, particularly those topping the Hugging...

13 MIN READ

Sep 18, 2024

Quickly Voice Your Apps with NVIDIA NIM Microservices for Speech and Translation

NVIDIA NIM, part of NVIDIA AI Enterprise, provides containers to self-host GPU-accelerated inferencing microservices for pretrained and customized AI models...

11 MIN READ

Sep 06, 2024

Using Generative AI Models in Circuit Design

Generative models have been making big waves in the past few years, from intelligent text-generating large language models (LLMs) to creative image and...

7 MIN READ

Jul 18, 2024

Accelerating Vector Search: NVIDIA cuVS IVF-PQ Part 2, Performance Tuning

In the first part of the series, we presented an overview of the IVF-PQ algorithm and explained how it builds on top of the IVF-Flat algorithm, using the...

14 MIN READ

Jul 18, 2024

Accelerating Vector Search: NVIDIA cuVS IVF-PQ Part 1, Deep Dive

In this post, we continue the series on accelerating vector search using NVIDIA cuVS. Our previous post in the series introduced IVF-Flat, a fast algorithm for...

14 MIN READ

Jun 28, 2024

Introducing DoRA, a High-Performing Alternative to LoRA for Fine-Tuning

Full fine-tuning (FT) is commonly employed to tailor general pretrained models for specific downstream tasks. To reduce the training cost, parameter-efficient...

6 MIN READ

Jun 14, 2024

Level Up Your Skills with Five New NVIDIA Technical Courses

With AI introducing an unprecedented pace of technological innovation, staying ahead means keeping your skills up to date. The NVIDIA Developer Program gives...

4 MIN READ

Jun 12, 2024

NVIDIA Sets New Generative AI Performance and Scale Records in MLPerf Training v4.0

Generative AI models have a variety of uses, such as helping write computer code, crafting stories, composing music, generating images, producing videos, and...

11 MIN READ

Apr 23, 2024

Webinar: Enhance LLMs with RAG and Accelerate Enterprise AI with Pure Storage and NVIDIA

Join Pure Storage and NVIDIA on April 25 to discover the benefits of enhancing LLMs with RAG for enterprise-scale generative AI applications.

1 MIN READ

Apr 22, 2024

Advancing Cell Segmentation and Morphology Analysis with NVIDIA AI Foundation Model VISTA-2D

Genomics researchers use different sequencing techniques to better understand biological systems, including single-cell and spatial omics. Unlike single-cell,...

7 MIN READ

Apr 02, 2024

Tune and Deploy LoRA LLMs with NVIDIA TensorRT-LLM

Large language models (LLMs) have revolutionized natural language processing (NLP) with their ability to learn from massive amounts of text and generate fluent...

15 MIN READ

Mar 27, 2024

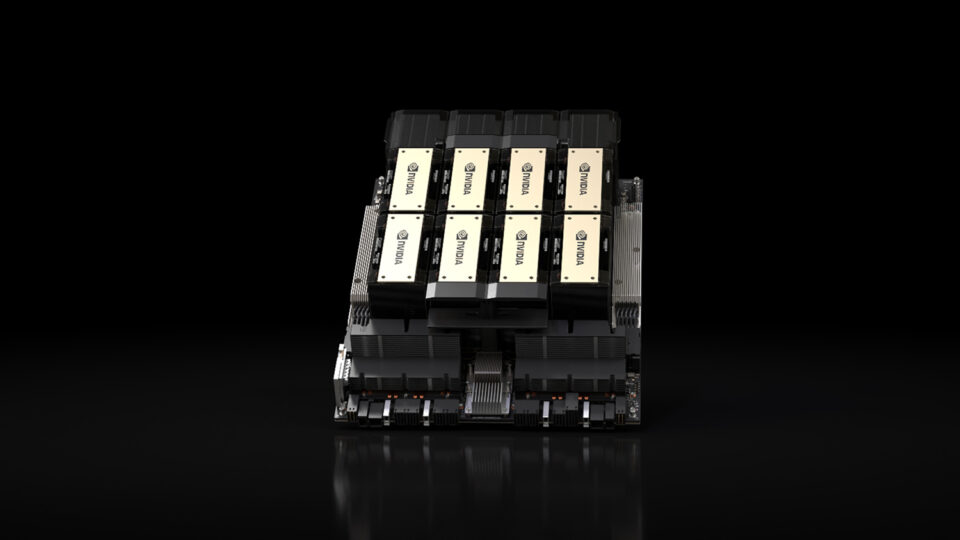

NVIDIA H200 Tensor Core GPUs and NVIDIA TensorRT-LLM Set MLPerf LLM Inference Records

Generative AI is unlocking new computing applications that greatly augment human capability, enabled by continued model innovation. Generative AI...

11 MIN READ

Mar 21, 2024

Rethinking How to Train Diffusion Models

After exploring the fundamentals of diffusion model sampling, parameterization, and training as explained in Generative AI Research Spotlight: Demystifying...

15 MIN READ