Components of Apache Spark

Last Updated: 28 Oct, 2022

Apache Spark is a cluster computing system. It is generally faster than other cluster computing systems such as Hadoop, and it provides high-level APIs in Python, Scala, Java, and R that make parallel jobs easy to write. In this article, we will discuss the different components of Apache Spark.

Spark processes huge datasets and is one of the most active Apache projects. It is written in Scala. Its most important feature is in-memory cluster computing, which increases the speed of data processing. Spark is a more general and faster processing platform than Hadoop: it can run programs up to a hundred times faster in memory and ten times faster on disk. The main features of Spark are:

- Multiple Language Support: Apache Spark provides APIs in Scala, Java, Python, and R, so users can write applications in any of these languages.

- Quick Speed: The most important feature of Apache Spark is its processing speed. It lets an application on a Hadoop cluster run up to one hundred times faster in memory and ten times faster on disk.

- Runs Everywhere: Spark runs on multiple platforms without affecting the processing speed. It can run on Hadoop, Kubernetes, Mesos, in standalone mode, and in the cloud.

- General Purpose: Spark is powered by a stack of libraries: SQL and DataFrames, MLlib for machine learning, Spark Streaming, and GraphX. These libraries can be combined in the same application. The ability to mix streaming, SQL, and complex analytics within one application makes Spark a general-purpose framework.

- Advanced Analytics: Apache Spark supports the “Map” and “Reduce” operations. Beyond MapReduce, it also supports streaming data, SQL queries, graph algorithms, and machine learning. Thus, Apache Spark can be used to perform advanced analytics.
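To make the “Map” and “Reduce” idea mentioned in the list above concrete, here is a minimal sketch in plain Python using only the standard library. It is not Spark code: the input `lines` and the helper `merge` are made up for illustration, and everything runs on a single machine.

```python
from functools import reduce

# Hypothetical input: two short lines of text.
lines = ["spark is fast", "spark is general"]

# "Map" step: turn every word of every line into a (word, 1) pair.
mapped = [(word, 1) for line in lines for word in line.split()]

def merge(counts, pair):
    """'Reduce' step: fold one (word, 1) pair into the running totals."""
    word, n = pair
    counts[word] = counts.get(word, 0) + n
    return counts

# Fold all pairs into a single dictionary of word counts.
word_counts = reduce(merge, mapped, {})
print(word_counts)
```

In a cluster framework the map step would run on many machines at once and the reduce step would combine their partial results; the sketch only shows the shape of the two steps.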

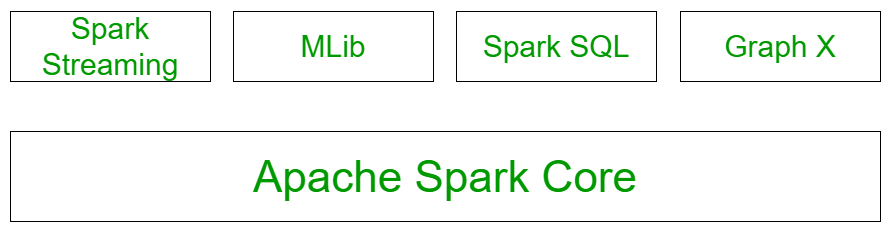

Components of Spark: The above figure illustrates all the Spark components. Let’s understand each of the components in detail:

- Spark Core: All the functionality provided by Apache Spark is built on top of Spark Core. It delivers speed through in-memory computation. Spark Core is the foundation for parallel and distributed processing of large datasets. It is the backbone of the essential I/O functionality and is responsible for programming and monitoring the Spark cluster. It holds the components for scheduling, distributing, and monitoring jobs on a cluster, as well as task dispatching and fault recovery. The functionalities of this component are:

- It contains the basic functionality of Spark (task scheduling, memory management, fault recovery, and interacting with storage systems).

- It is home to the API that defines RDDs (Resilient Distributed Datasets).
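The idea of distributing work over a dataset and then combining the results can be sketched in plain Python. This is only a single-machine analogy, not Spark's RDD API: the helpers `split_into_partitions` and `sum_of_squares` are hypothetical, and a thread pool stands in for the cluster's workers.

```python
from concurrent.futures import ThreadPoolExecutor

def split_into_partitions(data, num_partitions):
    """Split a list into contiguous partitions of roughly equal size."""
    size = max(1, -(-len(data) // num_partitions))  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]

def sum_of_squares(partition):
    """The 'task' that runs independently on one partition."""
    return sum(x * x for x in partition)

numbers = list(range(1, 11))
partitions = split_into_partitions(numbers, 3)

# Each partition is handed to a worker; the partial results are then
# combined, loosely mirroring how task results are gathered.
with ThreadPoolExecutor(max_workers=3) as pool:
    partial_sums = list(pool.map(sum_of_squares, partitions))

total = sum(partial_sums)
print(partitions, partial_sums, total)
```

The sketch leaves out what Spark Core actually adds on top of this pattern, such as scheduling across machines, memory management, and fault recovery.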

- Spark SQL (structured data): The Spark SQL component is built on top of Spark Core and provides structured data processing. It offers uniform access to a range of data sources, including Hive, JSON, and JDBC. It supports querying data either via SQL or via the Hive query language, and it works with both structured and semi-structured data. It also supports powerful, interactive, analytical applications across both streaming and historical data. Spark SQL is the Spark module that integrates relational processing with Spark's programming API. The main functionality of this module is:

- It is a Spark package for working with structured data.

- It supports many sources of data, including Hive tables, Parquet, and JSON.

- It allows developers to intermix SQL with programmatic data manipulation supported by RDDs in Python, Scala, and Java.
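The pattern of intermixing SQL with programmatic data manipulation can be sketched with Python's built-in `sqlite3` and `json` modules. This is an analogy under stated assumptions, not Spark SQL itself: SQLite stands in for the SQL engine, and the `people` table and its records are invented for the example.

```python
import json
import sqlite3

# Hypothetical semi-structured input, as it might arrive from a JSON source.
raw = '[{"name": "Ann", "age": 34}, {"name": "Bob", "age": 19}, {"name": "Cy", "age": 52}]'
people = json.loads(raw)

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE people (name TEXT, age INTEGER)")
conn.executemany("INSERT INTO people VALUES (:name, :age)", people)

# Declarative part: filter and sort with SQL.
rows = conn.execute(
    "SELECT name, age FROM people WHERE age >= 21 ORDER BY age"
).fetchall()

# Programmatic part: post-process the query result in ordinary code.
labels = [f"{name.upper()} ({age})" for name, age in rows]
print(labels)
conn.close()
```

The point of the sketch is the hand-off: the query result is an ordinary collection that the host language can keep transforming.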

- Spark Streaming: Spark Streaming enables scalable, high-throughput, fault-tolerant stream processing of live data streams. It can ingest data from sources such as Flume or a TCP socket, apply different algorithms to the data it receives, and push the results to file systems, databases, and live dashboards. Spark uses micro-batching for real-time streaming. Micro-batching is a technique that lets a process or task treat a stream as a sequence of small batches of data. Hence, Spark Streaming groups the live data into small batches and delivers them to the batch system for processing. The functionality of this module is:

- Enables processing of live streams of data like log files generated by production web services.

- The APIs defined in this module are quite similar to the Spark Core RDD APIs.
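Micro-batching, as described above, can be illustrated with a short plain-Python generator. This is a simplified sketch, not the Spark Streaming API: the helper `micro_batches` is hypothetical, and it groups by a fixed item count, whereas Spark Streaming groups incoming data by a time interval.

```python
from itertools import islice

def micro_batches(stream, batch_size):
    """Yield successive lists of at most batch_size items from a stream."""
    iterator = iter(stream)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return  # the stream is exhausted
        yield batch

# Hypothetical live stream: seven log lines produced one at a time.
log_lines = (f"GET /page/{i}" for i in range(7))

# Each small batch can now be processed with ordinary batch logic.
batch_sizes = [len(batch) for batch in micro_batches(log_lines, 3)]
print(batch_sizes)
```

Because every batch is just a small list, the same code that processes a static dataset can be reused on each batch.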

- MLlib (machine learning): MLlib is Spark's scalable machine learning library. The motivation behind MLlib is to make machine learning simple to implement. It contains implementations of various machine learning algorithms, for example clustering, regression, classification, and collaborative filtering.

- GraphX (graph processing): GraphX is an API for graphs and graph-parallel computation. It is used for network analytics, where the data is stored as a graph. Clustering, classification, traversal, searching, and pathfinding are all possible on the graph. GraphX optimizes the representation of vertices and edges, particularly when they are primitive data types. To support graph computation, it provides fundamental operators such as subgraph, joinVertices, and aggregateMessages, as well as an optimized variant of the Pregel API.
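The message-aggregation idea behind GraphX's graph operators can be sketched in plain Python. This is an illustration only: the function `aggregate_messages` below is a hypothetical single-machine helper with an invented signature, not the GraphX operator (GraphX is a Scala API), and the edge list is made up.

```python
# Hypothetical directed graph given as (source, destination) edges.
edges = [("a", "b"), ("a", "c"), ("b", "c"), ("c", "a")]

def aggregate_messages(edges, send, merge):
    """Send one message along each edge and merge messages per destination."""
    inbox = {}
    for src, dst in edges:
        message = send(src, dst)
        inbox[dst] = merge(inbox[dst], message) if dst in inbox else message
    return inbox

# In-degree: every edge sends the value 1 to its destination vertex,
# and messages arriving at the same vertex are added together.
in_degree = aggregate_messages(
    edges,
    send=lambda src, dst: 1,
    merge=lambda a, b: a + b,
)
print(in_degree)
```

Changing `send` and `merge` changes what is computed: for instance, sending the source's name and merging with set union would collect each vertex's incoming neighbours.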

Uses of Apache Spark: The main applications of the Spark framework are:

- The data generated by different systems is often not consistent enough to combine for analysis. To obtain consistent data, we can use extract, transform, and load (ETL) processes. Because these are implemented efficiently in Spark, they reduce time and cost.

- Time-stamped data such as log files is difficult to handle. Spark works well with streams of data and can reuse the same operations on them.

- Because Spark can keep data in memory and run repeated queries quickly, it makes it straightforward to try out machine learning algorithms and find the ones that suit a particular kind of data.
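The extract, transform, and load use case listed above can be sketched as three small functions in plain Python. This is a toy, single-machine illustration, not Spark code: the CSV text, the column names `user` and `amount`, and the in-memory load target are all assumptions made for the example.

```python
import csv
import io

# Hypothetical inconsistent input: stray spaces, mixed case, a bad amount.
raw_csv = "user,amount\n alice ,10.5\nBOB,n/a\nalice,4.5\n"

def extract(text):
    """Extract: parse the CSV text into a list of row dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: normalise user names and drop rows with unparsable amounts."""
    cleaned = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # skip records whose amount is not a number
        cleaned.append({"user": row["user"].strip().lower(), "amount": amount})
    return cleaned

def load(rows):
    """Load: write rows into an in-memory target holding per-user totals."""
    target = {}
    for row in rows:
        target[row["user"]] = target.get(row["user"], 0.0) + row["amount"]
    return target

totals = load(transform(extract(raw_csv)))
print(totals)
```

Keeping the three stages as separate functions makes each one easy to test and swap out on its own.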