Factors affecting Cache Memory Performance

Last Updated: 11 Jan, 2021

Computers are made of three primary blocks: a CPU, a memory, and an I/O system. The performance of a computer system depends heavily on the speed with which the CPU can fetch instructions and data from memory and write results back to it. Computers use cache memory to bridge the gap between the rate at which the processor can execute instructions and the time it takes to fetch instructions and operands from main memory.

The time a program takes to execute with a cache depends on:

- The number of instructions needed to perform the task.

- The average number of CPU cycles needed per instruction.

- The CPU’s cycle time.

When engineering any product or feature, the generic structure of the device stays the same; what changes is the specific part that must be optimized to meet client requirements. How does an engineer go about improving the design? We start by building a mathematical model that connects the inputs to the outputs.

Execution Time = Instruction Count x Cycles per Instruction x Cycle Time

=Instruction Count x (CPU Cycles per Instr. + Memory Cycles per Instr.) x Cycle Time

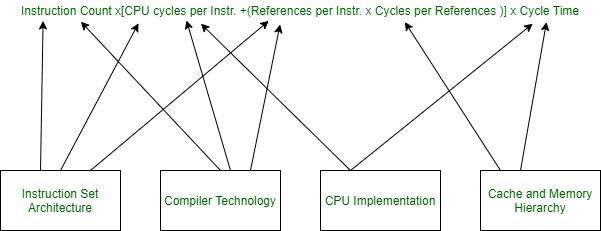

=Instruction Count x [CPU Cycles per Instr. +(References per Instr. x Cycles per References)] x Cycle Time

The four boxes in the figure mark four major areas where design choices can change the machine's performance significantly, for better or worse. The first element of the equation, the number of instructions needed to perform a function, depends on the instruction set architecture and is the same across all implementations of that architecture. It also depends on how well the compiler generates code: an optimizing compiler that performs the same function with fewer executed instructions is desirable.

CPU cycles per instruction also depend on compiler optimizations, since the compiler can choose instructions that are less CPU-intensive and have a shorter path length. Efficient pipelining also improves this parameter by keeping the hardware resources busy.

The average number of memory references per instruction and the average number of cycles per memory reference combine to form the average number of memory cycles per instruction. The former is a function of the architecture and of the compiler's instruction selection algorithms, and it is constant across implementations of the architecture. The latter depends on the cache and memory hierarchy of the particular implementation.
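The execution-time model above can be sketched as a small calculation. This is only an illustration: the function names and the sample numbers are assumptions, and `cycles_per_reference` uses the common single-level approximation (hit time plus miss rate times miss penalty), which the article does not state explicitly.

```python
def cycles_per_reference(hit_cycles, miss_rate, miss_penalty_cycles):
    """Average cycles per memory reference for a single-level cache.

    Assumption: every reference pays the hit time, and a fraction
    `miss_rate` of references additionally pays the miss penalty.
    """
    return hit_cycles + miss_rate * miss_penalty_cycles


def execution_time(instruction_count, cpu_cycles_per_instr,
                   refs_per_instr, cycles_per_ref, cycle_time_s):
    """Execution time in seconds, following the model in the text."""
    memory_cycles_per_instr = refs_per_instr * cycles_per_ref
    cycles_per_instr = cpu_cycles_per_instr + memory_cycles_per_instr
    return instruction_count * cycles_per_instr * cycle_time_s


# Hypothetical workload: 1 million instructions, 2 CPU cycles and
# 1.5 memory references per instruction, on a 1 GHz clock (1 ns cycle).
for miss_rate in (0.05, 0.10):
    per_ref = cycles_per_reference(hit_cycles=1, miss_rate=miss_rate,
                                   miss_penalty_cycles=40)
    seconds = execution_time(1_000_000, 2.0, 1.5, per_ref, 1e-9)
    print(f"miss rate {miss_rate:.2f}: {seconds * 1e3:.2f} ms")
```

With these made-up numbers, doubling the miss rate from 5% to 10% raises the execution time from about 6.5 ms to about 9.5 ms, even though the instruction count, CPU cycles per instruction, and cycle time are unchanged.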

Instruction Set Architecture :

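- Reduced Instruction Set Computer (RISC) –

RISC uses a small set of simple instructions, typically with memory accessed only through load and store instructions. It is one of the most popular kinds of instruction set and is used by ARM processors, which are among the most widely used chips in products.

- Complex Instruction Set Computer (CISC) –

CISC provides instructions that can each carry out several low-level steps, such as loading from memory, performing an arithmetic operation, and storing the result. The x86 family is the best-known example.

- Minimal instruction set computers (MISC) –

MISC is a processor architecture with a very small number of basic operations and opcodes, often organized around a stack. Compared with modern processors, an older 8-bit processor such as the 8085 looks minimal, although it is not usually classified as MISC.

- Explicitly parallel instruction computing (EPIC) –

EPIC is an approach in which the compiler explicitly marks which instructions may execute in parallel, instead of leaving that decision to the hardware. It is the basis of the Intel Itanium (IA-64) architecture.

- One instruction set computer (OISC) –

OISC is an abstract machine whose instruction set consists of a single instruction, for example "subtract and branch if less than or equal to zero" (subleq).

- Zero instruction set computer (ZISC) –

ZISC is an architecture based on pattern matching rather than on conventional instructions, and it is associated with hardware implementations of neural networks.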
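To make the link between instruction set and instruction count concrete, here is a minimal sketch of a one-instruction machine. It assumes the common "subleq" instruction (subtract and branch if the result is less than or equal to zero) and the convention that a negative jump target halts the machine; the function name `run_subleq` and the memory layout are made up for this illustration.

```python
def run_subleq(memory, max_steps=10_000):
    """Run a subleq program stored in `memory` (a list of ints) in place.

    Each instruction occupies three cells a, b, c:
        memory[b] -= memory[a]
        jump to c if the result is <= 0, else continue with the next one.
    A negative jump target halts the machine (assumed convention).
    Returns the number of instructions executed.
    """
    pc = 0
    steps = 0
    while pc >= 0:
        if steps >= max_steps:
            raise RuntimeError("step limit reached")
        a, b, c = memory[pc], memory[pc + 1], memory[pc + 2]
        memory[b] -= memory[a]
        pc = c if memory[b] <= 0 else pc + 3
        steps += 1
    return steps


# Program: B = B + A, using a scratch cell Z that starts at 0.
# Cells 0-8 hold three instructions; cells 9-11 hold A, B, and Z.
A, B, Z = 9, 10, 11
program = [
    A, Z, 3,     # Z = Z - A        (Z now holds -A)
    Z, B, 6,     # B = B - Z        (B now holds B + A)
    Z, Z, -1,    # Z = 0, then halt
    7, 5, 0,     # data: A = 7, B = 5, Z = 0
]
executed = run_subleq(program)
print(program[B], executed)  # prints "12 3"
```

An addition that is a single instruction on most RISC or CISC machines takes three instructions here, which is why the instruction count term of the model depends on the instruction set architecture.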

Compiler Technology :

- Single Pass Compiler –

The source code is processed once and converted directly into machine code.

- Two-Pass Compiler –

The source code is first converted into an intermediate representation, which is then converted into machine code.

- Multipass Compiler –

The front end converts the source code into intermediate code, the middle end transforms and optimizes that intermediate code, and the back end converts the result into machine code.
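The front end, middle end, and back end stages can be sketched as three small passes over an arithmetic expression. This is a toy illustration, not a real compiler: it borrows Python's standard `ast` module as the front end, handles only `+`, `-`, and `*`, and targets a hypothetical stack machine whose `PUSH`, `LOAD`, `ADD`, `SUB`, and `MUL` instructions are invented for the example.

```python
import ast
import operator

# Supported operators: instruction name and the function used for folding.
OPS = {
    ast.Add: ("ADD", operator.add),
    ast.Sub: ("SUB", operator.sub),
    ast.Mult: ("MUL", operator.mul),
}


def front_end(source):
    """Pass 1: parse source text into a tree-shaped intermediate form."""
    return ast.parse(source, mode="eval").body


def middle_end(node):
    """Pass 2: constant folding, returning a new intermediate form."""
    if isinstance(node, ast.BinOp):
        left, right = middle_end(node.left), middle_end(node.right)
        if isinstance(left, ast.Constant) and isinstance(right, ast.Constant):
            _, fold = OPS[type(node.op)]
            return ast.Constant(value=fold(left.value, right.value))
        return ast.BinOp(left=left, op=node.op, right=right)
    return node


def back_end(node):
    """Pass 3: emit instructions for the hypothetical stack machine."""
    if isinstance(node, ast.Constant):
        return [("PUSH", node.value)]
    if isinstance(node, ast.Name):
        return [("LOAD", node.id)]
    if isinstance(node, ast.BinOp):
        name, _ = OPS[type(node.op)]
        return back_end(node.left) + back_end(node.right) + [(name,)]
    raise ValueError(f"unsupported expression: {ast.dump(node)}")


tree = front_end("x * (2 + 3) - 4 * 1")
unoptimized = back_end(tree)
optimized = back_end(middle_end(tree))
print(len(unoptimized), len(optimized))  # prints "9 5"
print(optimized)
```

Skipping `middle_end` corresponds to the two-pass arrangement. Adding it reduces the emitted code from nine instructions to five for this expression, which is the kind of instruction count reduction an optimizing compiler aims for.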

CPU Implementation :

The microarchitecture depends on the design philosophy and methodology of the engineers involved. Take the simple example of a circuit that takes input from a common jack, passes it through an amplifier, and stores the data in a buffer.

Two approaches can solve this problem. The first places a buffer at the input, followed by two amplifiers, and routes the current through one or the other; this makes sense if two different types of signal must be amplified, or if the saturation regions of the amplifiers differ slightly. The second uses a single common current path and makes the buffer in which the data is stored time-dependent, which removes the need for the additional buffering.

Minute differences like these in the VLSI microarchitecture of a processor create large timing differences between implementations of the same instruction set by two different companies.

Cache and Memory Hierarchy :

This again depends on the use case for which the system was built. A general-purpose computer, also called a personal computer, can perform a wide variety of calculations and produces reasonably accurate results for non-real-time work, but using one in a hard real-time system would be very unwise.

One major difference between such systems is the time taken to access data in the cache.

A simple experiment can be run on your own computer: find the cache size of your processor model, then time accesses to arrays whose sizes are around that cache size. A massive slowdown will be observed once the array is larger than the cache.
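Timing real cache behaviour from Python is noisy because interpreter overhead dominates, so the sketch below models the experiment instead of measuring it. It simulates repeated sequential sweeps over an array in a direct-mapped cache and reports the miss rate. The cache size (32 KiB), line size (64 bytes), element size (8 bytes), and the function name `miss_rate` are all assumptions chosen for illustration; real caches are usually set-associative and behave less abruptly.

```python
def miss_rate(array_bytes, cache_bytes=32 * 1024, line_bytes=64,
              element_bytes=8, sweeps=4):
    """Miss rate of repeated sequential sweeps over an array.

    Models a direct-mapped cache: each memory block can live in exactly
    one cache line, chosen by the block number modulo the line count.
    """
    num_lines = cache_bytes // line_bytes
    tags = [None] * num_lines      # memory block currently held by each line
    hits = misses = 0
    for _ in range(sweeps):
        for addr in range(0, array_bytes, element_bytes):
            block = addr // line_bytes
            index = block % num_lines
            if tags[index] == block:
                hits += 1
            else:
                misses += 1
                tags[index] = block    # evict whatever was there before
    return misses / (hits + misses)


for kib in (16, 32, 64):
    print(f"{kib:3d} KiB array: miss rate {miss_rate(kib * 1024):.5f}")
```

In this model an array that fits in the cache only misses during the first sweep, so its miss rate keeps falling as `sweeps` grows. An array twice the cache size evicts its own data on every sweep, so its miss rate stays at one miss per cache line (0.125 with these parameters) no matter how many sweeps are run.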
