What are Torch Scripts in PyTorch?

Last Updated: 06 Sep, 2024

TorchScript is a PyTorch feature that lets developers create serializable and optimizable models from PyTorch code. It serves as an intermediate representation of a PyTorch model that can be loaded and run outside Python, for example from a C++ program, without the need for a Python runtime. This capability is crucial for deploying models in production environments where Python might not be available or desired.

What is TorchScript?

TorchScript is essentially a subset of the Python language that is specifically designed to work with PyTorch models. It allows for the conversion of PyTorch models into a format that can be executed independently of Python. This conversion is achieved through two primary methods: tracing and scripting.

- Tracing: This method involves running a model with example inputs and recording the operations performed. It captures the model's operations in a way that can be replayed later. However, tracing can miss dynamic control flows like loops and conditional statements because it only records the operations executed with the given inputs.

- Scripting: This method involves converting the model's source code into TorchScript. It inspects the code and compiles it into a form that can be executed by the TorchScript runtime. Scripting is more flexible than tracing as it can handle dynamic control flows, but it requires the code to be compatible with TorchScript's subset of Python.
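The difference between the two methods is easiest to see on a module with a data-dependent branch. The following is a minimal sketch, not taken from the original article: the `Gate` module and its input values are hypothetical and chosen only for illustration. It traces the module with an input that takes the first branch, then feeds both compiled versions an input that should take the second branch.

```python
import warnings

import torch
import torch.nn as nn


class Gate(nn.Module):
    """Hypothetical module whose output depends on the sign of the input sum."""

    def forward(self, x):
        if x.sum() > 0:
            return x * 2
        return torch.zeros_like(x)


gate = Gate()

# Tracing follows only the branch taken by the example input (positive sum).
# PyTorch emits a TracerWarning here; it is silenced to keep the output short.
with warnings.catch_warnings():
    warnings.simplefilter("ignore")
    traced = torch.jit.trace(gate, torch.ones(3))

# Scripting compiles the source code, so both branches are kept.
scripted = torch.jit.script(gate)

negative = -torch.ones(3)
eager_out = gate(negative)
traced_out = traced(negative)      # replays the recorded "x * 2" branch
scripted_out = scripted(negative)  # evaluates the condition at run time

print("eager:   ", eager_out)
print("traced:  ", traced_out)
print("scripted:", scripted_out)
```

The scripted module agrees with eager execution, while the traced module silently returns the result of the branch it saw during tracing.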

Key Features of TorchScript

TorchScript brings several advantages to PyTorch models:

- Performance Improvements: Because TorchScript represents the model as a static graph, the JIT compiler can apply graph-level optimizations, such as operator fusion, that are hard to achieve in the standard eager execution mode. The actual speedup depends on the model and hardware.

- Compatibility: Once a model is converted to TorchScript, it can be executed in C++ without requiring Python, making it ideal for production deployment.

- Cross-platform Deployment: TorchScript models can be deployed across various platforms such as mobile, edge devices, and cloud environments.

- Serialization: Models in TorchScript can be serialized to a single file, allowing for easy sharing and deployment.
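The serialization point can be illustrated with a short round trip. This is a sketch under simple assumptions: the layer sizes and the file name `linear_scripted.pt` are arbitrary, and the file is written to a temporary directory. It uses `save` on the compiled module and `torch.jit.load` to restore it.

```python
import os
import tempfile

import torch
import torch.nn as nn

model = nn.Linear(4, 2).eval()
scripted = torch.jit.script(model)

# Write to a temporary directory; the file name is arbitrary.
path = os.path.join(tempfile.mkdtemp(), "linear_scripted.pt")
scripted.save(path)

# torch.jit.load needs only the file, not the Python class definition.
restored = torch.jit.load(path)

x = torch.randn(3, 4)
outputs_match = torch.allclose(scripted(x), restored(x))
print("outputs match:", outputs_match)
```

The restored module carries both the weights and the compiled forward logic, which is what makes the file usable from a process that has no access to the original model code.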

How to Use TorchScript in PyTorch

TorchScript can be utilized in two ways: tracing and scripting. Both approaches produce a TorchScript module that is saved, loaded, and executed the same way, but they differ in how they capture the behavior of your PyTorch model.

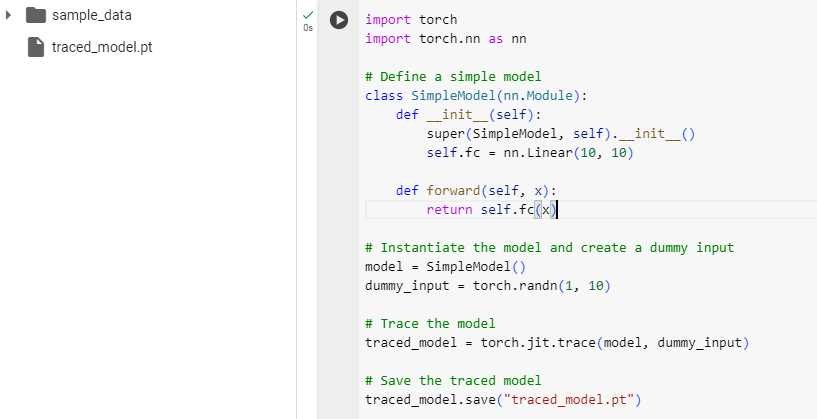

Tracing with TorchScript

Tracing is one of the ways to convert a PyTorch model to TorchScript. In tracing, PyTorch runs a forward pass on an example input, records the operations performed, and constructs a computation graph from that recording. Here’s how to trace a simple model:

```python
import torch
import torch.nn as nn

# Define a simple model
class SimpleModel(nn.Module):
    def __init__(self):
        super(SimpleModel, self).__init__()
        self.fc = nn.Linear(10, 10)

    def forward(self, x):
        return self.fc(x)

# Instantiate the model and create a dummy input
model = SimpleModel()
dummy_input = torch.randn(1, 10)

# Trace the model
traced_model = torch.jit.trace(model, dummy_input)

# Save the traced model
traced_model.save("traced_model.pt")
```

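Output: the script prints nothing to the console. It writes the serialized model to traced_model.pt in the current working directory.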
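To see what tracing actually recorded, a traced module exposes its generated TorchScript through the `code` and `graph` attributes. The sketch below is an illustrative addition rather than part of the original example: it traces a bare `nn.Linear` layer (an assumed stand-in for the model above), prints both views, and checks that the traced module still matches eager execution on a different batch size.

```python
import torch
import torch.nn as nn

layer = nn.Linear(10, 10)
traced = torch.jit.trace(layer, torch.randn(1, 10))

# Python-like view of the recorded forward pass.
print(traced.code)

# Lower-level graph representation of the same computation.
print(traced.graph)

# The recorded operations are not tied to the batch size of the example input.
batch = torch.randn(5, 10)
same_as_eager = torch.allclose(traced(batch), layer(batch))
print("matches eager:", same_as_eager)
```

Inspecting `code` after tracing is a quick way to confirm that no expected operation is missing from the recording.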

Scripting with TorchScript

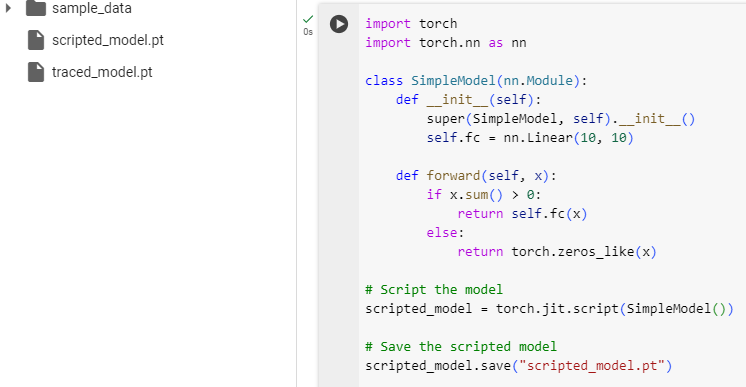

While tracing works well for many models, it has limitations, particularly with control flow such as loops and conditionals. For these cases, scripting is the preferred method. Scripting compiles the module's Python source code, including its control flow, into TorchScript. Here’s an example of scripting:

```python
import torch
import torch.nn as nn

class SimpleModel(nn.Module):
    def __init__(self):
        super(SimpleModel, self).__init__()
        self.fc = nn.Linear(10, 10)

    def forward(self, x):
        if x.sum() > 0:
            return self.fc(x)
        else:
            return torch.zeros_like(x)

# Script the model
scripted_model = torch.jit.script(SimpleModel())

# Save the scripted model
scripted_model.save("scripted_model.pt")
```

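Output: the script prints nothing to the console. It writes the serialized model to scripted_model.pt in the current working directory.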

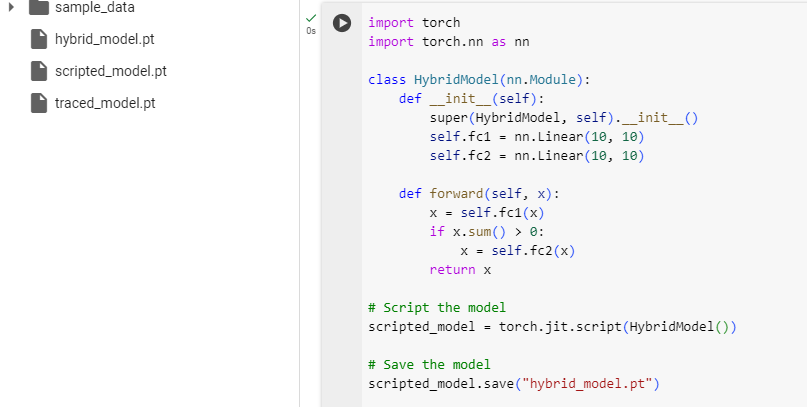
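Scripting handles loops as well as conditionals. The following sketch is a hypothetical addition (the function `repeat_double` is not from the original article) showing a loop whose iteration count is a run-time argument. Type annotations are included because the compiler needs to know that `steps` is an integer rather than a tensor.

```python
import torch


@torch.jit.script
def repeat_double(x: torch.Tensor, steps: int) -> torch.Tensor:
    # The loop bound is a run-time value, not a constant fixed at compile time.
    for _ in range(steps):
        x = x * 2
    return x


ones = torch.ones(2)
three_steps = repeat_double(ones, 3)  # 1 * 2 * 2 * 2
zero_steps = repeat_double(ones, 0)   # loop body never runs

print(three_steps)
print(zero_steps)

# The compiled code still contains the loop.
print(repeat_double.code)
```

A traced version of the same function would instead unroll the loop for the step count used during tracing.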

Combining Tracing and Scripting

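In some cases, you might want to mix tracing and scripting to leverage the benefits of both. For instance, tracing can be used for portions of the model that are static, while scripting can handle dynamic portions like control flow. The example below takes the simplest route: it scripts the whole module, so the conditional in forward is preserved.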

```python
import torch
import torch.nn as nn

class HybridModel(nn.Module):
    def __init__(self):
        super(HybridModel, self).__init__()
        self.fc1 = nn.Linear(10, 10)
        self.fc2 = nn.Linear(10, 10)

    def forward(self, x):
        x = self.fc1(x)
        if x.sum() > 0:
            x = self.fc2(x)
        return x

# Script the model
scripted_model = torch.jit.script(HybridModel())

# Save the model
scripted_model.save("hybrid_model.pt")
```

Output: the script prints nothing to the console. It writes the serialized model to hybrid_model.pt in the current working directory.
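For a version that genuinely mixes the two methods, a traced submodule can be placed inside a module that is then scripted. The sketch below is one possible arrangement, assuming the same layer sizes as above; the class name `MixedModel` is hypothetical. The static first layer is traced, and the surrounding module, which contains the conditional, is scripted.

```python
import torch
import torch.nn as nn


class MixedModel(nn.Module):
    def __init__(self):
        super().__init__()
        # Static part: traced once with an example input.
        self.fc1 = torch.jit.trace(nn.Linear(10, 10), torch.randn(1, 10))
        # Dynamic part: left as a regular module and compiled by scripting.
        self.fc2 = nn.Linear(10, 10)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        x = self.fc1(x)
        if x.sum() > 0:
            x = self.fc2(x)
        return x


mixed = torch.jit.script(MixedModel())

sample = torch.randn(4, 10)
out = mixed(sample)

# Recompute by hand to confirm that the branch is chosen at run time.
hidden = mixed.fc1(sample)
expected = mixed.fc2(hidden) if hidden.sum() > 0 else hidden
print(out.shape, torch.allclose(out, expected))
```

This pattern is mostly useful when part of a model cannot be scripted directly but traces cleanly, while the logic around it needs real control flow.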

Common Errors with TorchScript in PyTorch

- Control Flow Issues: When using tracing, control flow statements like if or for loops can cause issues because tracing only captures one path of execution. Switching to scripting can resolve these issues.

- Unsupported Operations: Not all PyTorch operations are supported in TorchScript. Ensuring that the model's code adheres to the supported subset of Python is crucial.
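One common way to step outside the supported subset is to rely on Python's dynamic typing. TorchScript is statically typed and treats unannotated function arguments as tensors, so passing a plain number to such an argument is rejected at call time. The sketch below uses two hypothetical functions, `scale_untyped` and `scale_typed`, to show the failure and the fix.

```python
import torch


def scale_untyped(x, factor):
    # No annotations: the compiler assumes both arguments are tensors.
    return x * factor


def scale_typed(x: torch.Tensor, factor: float) -> torch.Tensor:
    # Explicit annotation tells the compiler that factor is a Python float.
    return x * factor


scripted_untyped = torch.jit.script(scale_untyped)
scripted_typed = torch.jit.script(scale_typed)

x = torch.ones(3)

try:
    scripted_untyped(x, 2.0)
    untyped_call_failed = False
except RuntimeError as err:
    untyped_call_failed = True
    print("untyped call rejected:", str(err).splitlines()[0])

typed_out = scripted_typed(x, 2.0)
print("typed call result:", typed_out)
```

When scripting fails, the error message usually points at the offending line, so adding annotations or rewriting that construct is often enough.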

When to Use TorchScript

- TorchScript is particularly useful in scenarios where performance is critical or when deploying models in environments without Python.

- It is also beneficial when models need to be integrated into larger systems written in other programming languages.

- However, not all PyTorch models can be easily converted to TorchScript, especially those relying heavily on Python-specific features that TorchScript does not support.

Conclusion

TorchScript is a powerful tool for deploying PyTorch models in high-performance environments. By understanding the differences between tracing and scripting, and following best practices for conversion and optimization, users can leverage TorchScript to achieve efficient and scalable model deployment. Whether you are deploying models on servers, mobile devices, or other platforms, TorchScript provides the flexibility and performance needed to meet your deployment requirements.
