An AI PC is the next big thing in PCs…or so a lot of companies would have you believe. But what is an AI PC, why should you buy one, and what — if anything — does an AI PC offer? While those answers are evolving over time, we can tell you what we know right now.

The short answer: Microsoft appears to be working on a formal definition of an “AI PC” behind the scenes. But it’s very possible that you own an “AI-capable PC” right now. The difference between the two may come down to a list of specifications and maybe even a dedicated sticker to grace your laptop.

When the industry talks about an “AI PC,” it refers to a PC that can process AI tasks faster. But vendors understand that in different ways. Some, like Microsoft, appear to define it as a PC that can access “AI” like Windows Copilot, which currently lives natively in the cloud. Chip vendors like AMD, Intel, and Qualcomm feel strongly that AI should be processed locally, via their microprocessors, on your PC. And PC vendors? We’d like to think that they’ll choose any approach that nets them more sales.

So which is it? All three perspectives, actually. “AI PCs” have evolved from a nebulous concept, but Microsoft has a specific definition in mind that’s reminiscent of the Windows 11 hardware requirements. Let’s walk through where AI PCs came from, and where they’re going.

What AI is, and what it does

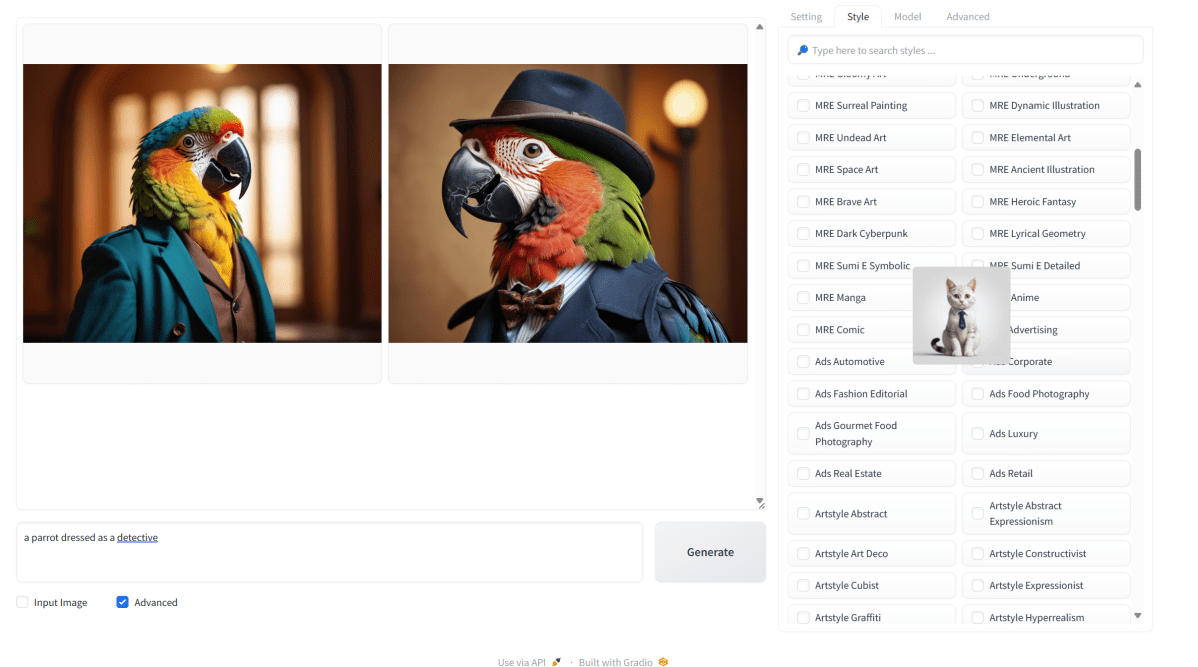

The simplest way to run AI is in the cloud: LLMs (AI chatbots) like Microsoft Copilot, AI art like the Bing Image Creator (now part of Copilot and Microsoft Designer), AI video from Adobe and Runway, and AI music like Udio. In this format, AI is just another website that you can interact with. You can do that with your PC. You can do that with your phone.

Mark Hachman / IDG

This is where the concept of an “AI PC” becomes more PC-centric. If you work for a business, your IT department is probably paranoid about confidential information leaking onto the Internet. While you might ask Copilot for tips on how to save for your retirement, your company doesn’t want the possibility of Google or Microsoft learning about its plans. So if it chooses an LLM, it might want to run it within the protection of its corporate firewall. Likewise, you might want to experiment with AI art or ask an embarrassing question of an LLM that you don’t want recorded in the cloud. Privacy matters.

Local AI advocates also say that running AI locally may be faster than the cloud. In our experience, that’s not necessarily true. OpenAI, Google, and Microsoft have spent considerable time and effort making sure their cloud AI is fast and responsive.

The final argument is that a local LLM can be “trained” to know you and your specific preferences and actions.

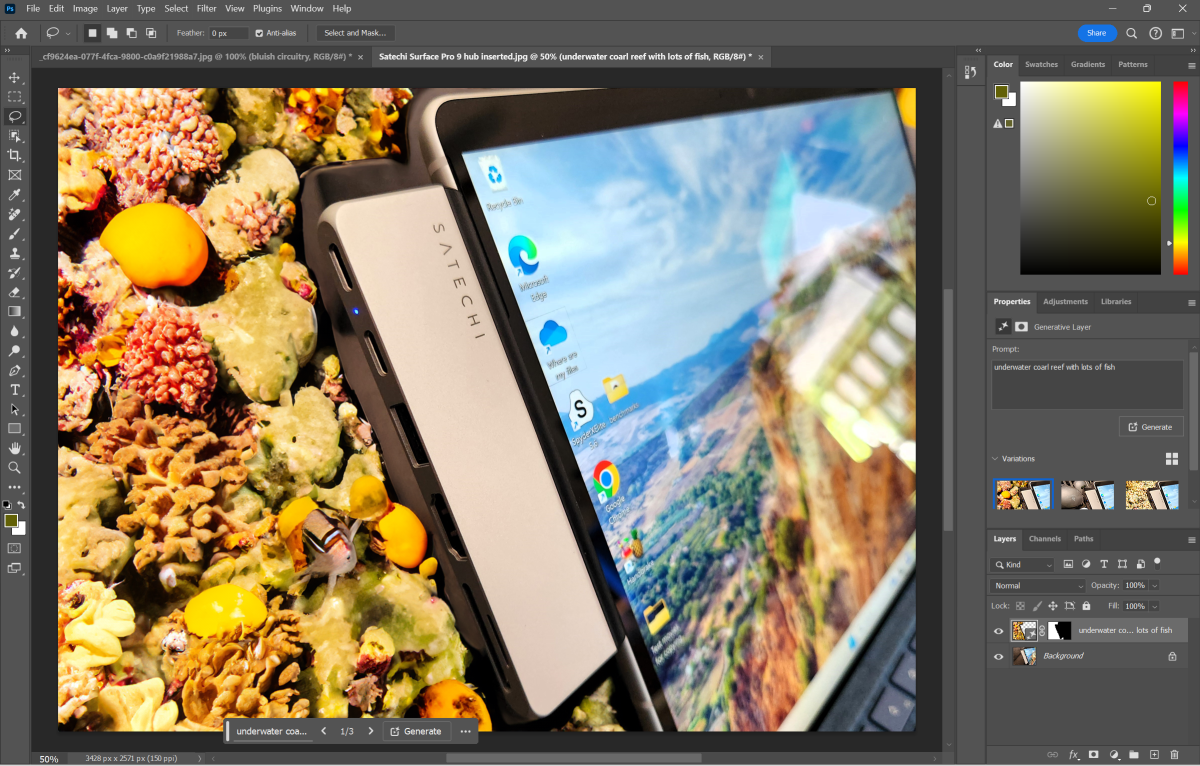

Applications can strike a balance. Photoshop is a good example, as it runs “AI” tools like the ability to remove objects from an image on your PC. But if you want to change a king’s crown to a baseball cap, for example, Adobe’s own cloud-based Firefly service performs those tasks. We’re always on the lookout for new AI apps that can justify local AI processing, as these will make or break AI PCs.

You probably already own an AI PC

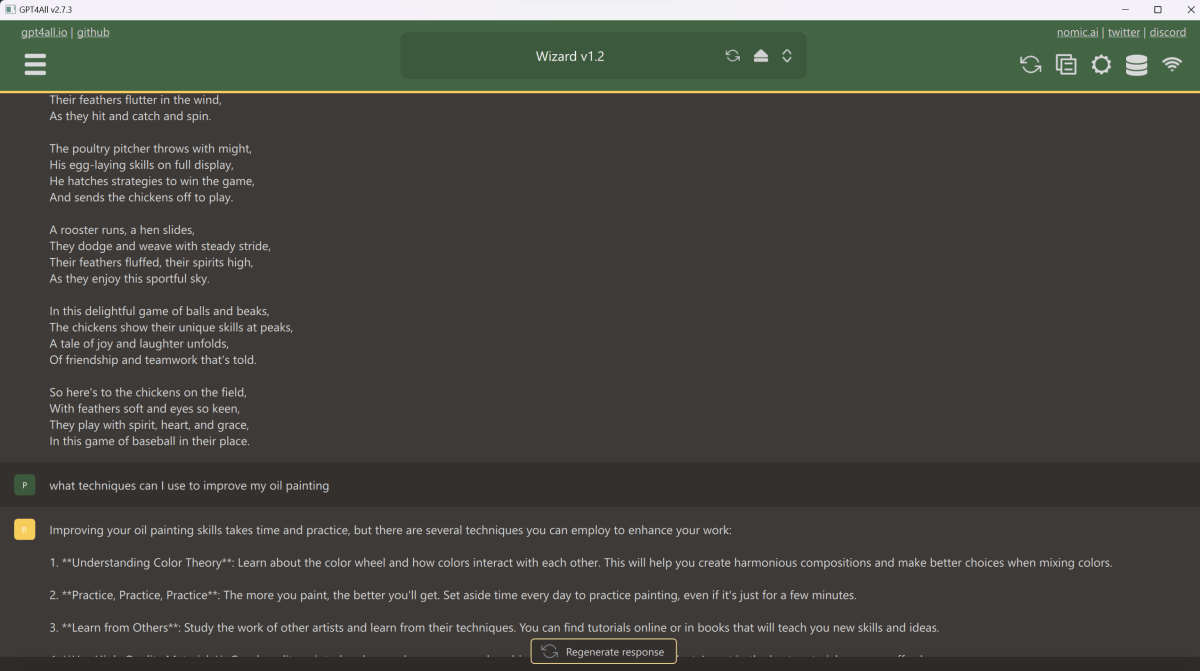

As I noted earlier, most AI can be run through the cloud. But when you run AI locally on your PC, you have three (and maybe four) options.

A PC has three main processing engines: the CPU, the GPU, and a new addition, the NPU. CPUs and GPUs (or graphics cards) have existed for decades, and they’re just fine for running local AI art and LLMs — really! Running a local LLM on just your CPU is slow, but add in a dedicated GPU and performance dramatically speeds up. In terms of AI, the GPU is still the fastest local processor you’ll own, but it’s not the most efficient.

Put another way: if you own a gaming PC with a dedicated GPU, you already own an AI-capable PC.

Now, existing and upcoming processors have NPUs inside. Intel’s Meteor Lake/Core Ultra processor will compete with the AMD Ryzen 8000 series. Qualcomm’s Snapdragon X Elite is waiting in the wings, with a number of laptops scheduled to ship by midyear. Intel already claims that it’s shipped 5 million AI PCs, with about 40 million due to ship by the end of the year.

What chip vendors would like to sell you on, though, is the concept of a Neural Processing Unit, or NPU. An NPU (ideally) is the heart of an AI PC. It’s actually conceptually similar to a GPU, in that it can perform repetitive tasks, quickly. In fact, that’s where the defining “speed” metric of an NPU comes in: TOPS, or trillions of operations per second.

We think of CPU speed in terms of gigahertz, or billions of cycles per second. NPUs are rated in TOPS, churning through trillions of operations per second. With an LLM, speed is also measured in tokens, the basic units of data a model works with. One token generally equates to about four characters of text, so the “answers” an LLM provides are actually tokens, in AI-speak.
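As a rough illustration of that rule of thumb, here is a minimal sketch that estimates a token count from text length. It assumes the approximate four-characters-per-token figure quoted above; real tokenizers vary by model and language, and the function name is hypothetical.

```python
import math

# Assumption: roughly four characters per token, per the rule of thumb above.
# Real tokenizers differ by model and by language.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Rough token estimate: character count divided by four, rounded up."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


if __name__ == "__main__":
    sample = "What is an AI PC?"
    print(f"{len(sample)} characters is roughly {estimate_tokens(sample)} tokens")
```

This is only an estimate for sizing prompts and answers, not a substitute for a model's actual tokenizer.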

The effectiveness of the AI depends on how quickly and in what volume an LLM can process tokens, as well as how complex the AI model is. If the LLM runs on your machine, the TOPS your hardware can deliver helps determine how quickly the LLM can spit out an answer.
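To make the throughput point concrete, here is a minimal sketch of how token throughput translates into waiting time. The function name, the answer length, and the rates in the example are all hypothetical illustrations, not measurements of any real chip.

```python
def seconds_to_generate(num_tokens: int, tokens_per_second: float) -> float:
    """Time to produce an answer of num_tokens at a steady generation rate."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


if __name__ == "__main__":
    answer_tokens = 200  # hypothetical answer length, in tokens
    for rate in (5.0, 20.0, 50.0):  # hypothetical tokens-per-second rates
        wait = seconds_to_generate(answer_tokens, rate)
        print(f"{rate:>5.1f} tokens/s: about {wait:.1f} seconds")
```

The same answer that takes well under ten seconds on fast hardware can take most of a minute on slow hardware, which is why local throughput matters.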

Chip vendors typically define TOPS in terms of their NPU, or sometimes as their NPU, GPU, and CPU working together. I actually haven’t seen any application that can use all three in conjunction, but I’m sure those days are coming.

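Here are the TOPS figures the big three PC chip vendors quote. Note that Intel’s and AMD’s numbers are platform totals (CPU, GPU, and NPU combined), while Qualcomm’s headline number is for the NPU alone, with the platform total in parentheses: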

- Intel Core Ultra (Meteor Lake): 34 TOPS

- AMD Ryzen 8040 Series: 39 TOPS

- Qualcomm Snapdragon X Elite: 45 TOPS (75 total)

Intel said at CES that its upcoming Lunar Lake processor will deliver three times the AI performance (102 TOPS, presumably). That mobile chip is due before the end of the year, and will likely be called the Core Ultra Series 2.

Intel

Nvidia doesn’t release the performance of its PC GPUs in TOPS, but a GeForce RTX 4090 delivers between 83 and 100 TFLOPS, or trillions of floating-point operations per second. Floating-point operations are generally considered to be more intensive than the “standard” integer operations used in TOPS.

There’s a wild card, too: AI accelerator cards, which we first saw earlier this year. Will they make a splash? We don’t know yet.

What’s an NPU good for in an AI PC?

Most enthusiasts think of chips in terms of performance: how fast is it? At least for now, NPUs are being positioned in terms of efficiency: how much AI performance can they deliver while minimizing power?

In this context, vendors are thinking about laptops running on battery. And right now the flagship NPU task is Windows Studio Effects.

Windows Studio Effects are Microsoft’s AI tools for improving Teams video calls. They consist of the ability to replace or blur your background; audio filtering; using the camera’s cropping ability to “pan and zoom” on your face as you move around; and a kind of creepy AI technique that makes it seem like your eyes are always on the camera and paying attention.

By offloading that task to the efficient NPU, your laptop can stay powered up for a marathon day of video calls. Hurray for progress, am I right?

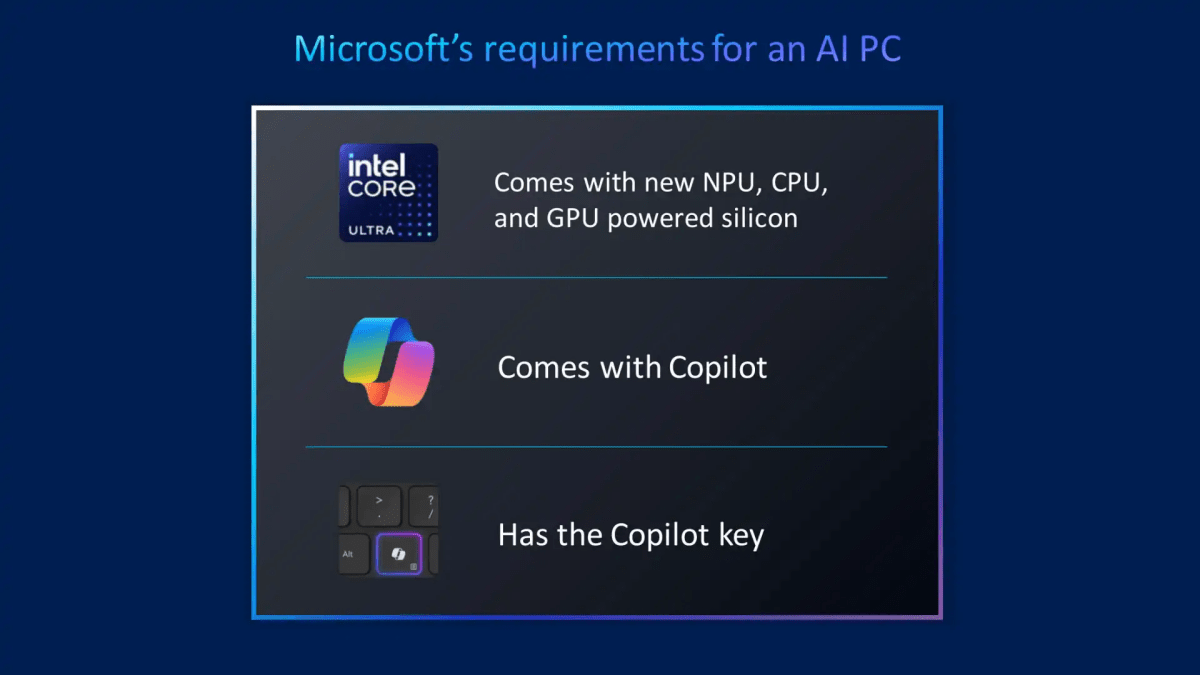

The AI PC’s hardware requirements, according to Microsoft

So we know what an AI PC is supposed to do, and what it’s good for. Is there going to be an approved, official AI PC?

I think so. Intel executives alluded to it in a conference call, revealing that for now there are three components of an “official AI PC”: a Copilot key, the ability of the PC to run Copilot, and a dedicated NPU inside. Additional reports have said that there is a minimum requirement of 45 TOPS, but Microsoft hasn’t confirmed this.

If the latter requirement is true, that would mean that PCs with the Qualcomm Snapdragon X Elite chip inside would qualify as AI PCs, but PCs with Intel’s first Core Ultra inside would not. When you consider that Microsoft is expected to launch consumer versions of its Surface Pro 10 and Surface Laptop 6 at its Build conference in May with Snapdragon X Elite chips inside, it all makes sense: this could be the formal unveiling of the AI PC.
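That reasoning is a simple threshold check, sketched below. The TOPS values are the vendor-quoted figures listed earlier in this article, and the 45 TOPS minimum is the reported requirement that Microsoft has not confirmed; the constant and function names are hypothetical.

```python
# Assumption: a 45 TOPS minimum, as reported but NOT confirmed by Microsoft.
RUMORED_MIN_TOPS = 45

# Vendor-quoted TOPS figures as listed earlier in this article.
QUOTED_TOPS = {
    "Intel Core Ultra (Meteor Lake)": 34,
    "AMD Ryzen 8040 Series": 39,
    "Qualcomm Snapdragon X Elite": 45,
}


def meets_rumored_requirement(tops: float, minimum: float = RUMORED_MIN_TOPS) -> bool:
    """True if a chip's quoted TOPS meets or exceeds the rumored minimum."""
    return tops >= minimum


qualifying = [
    name for name, tops in QUOTED_TOPS.items() if meets_rumored_requirement(tops)
]

if __name__ == "__main__":
    for name, tops in QUOTED_TOPS.items():
        verdict = "qualifies" if meets_rumored_requirement(tops) else "falls short"
        print(f"{name}: {tops} TOPS, {verdict}")
```

If the real requirement turns out to be different, only the threshold constant changes; the comparison stays the same.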

Where can I buy an AI PC?

Microsoft hasn’t formally defined what an AI PC is. It’s possible that we’ll eventually see some sort of official badging, like the “Designed for Windows XP” stickers of old.

Every Intel Core Ultra PC and most AMD Ryzen 7000 and 8000 PCs (assuming they have Ryzen AI inside) include an NPU that can accelerate AI. But only the very latest (as of this writing) laptops include the Copilot key that Microsoft endorses.

NPU, Copilot key, and ships with Microsoft Copilot… the Asus ZenBook 14 OLED is an AI PC.

IDG / Matthew Smith

Should you buy an AI PC?

We still, still don’t know whether Microsoft will run Copilot or a version of Copilot on local PCs or not, and that could be a big selling point for an AI PC — if the experience proves satisfactory. Likewise, we don’t know whether some killer app will, um, surface: will there be something that screams, you’ve got to own this? (My vote so far is for this killer AI-powered audio filtering that just obliterates background noise.)

It’s likelier that Microsoft will drip new AI features into Windows over time, making it more and more compelling. Remember, I think that it’s time to switch to Windows 11, but that’s in part because Microsoft will likely tie AI into its latest feature release of the Windows 11 operating system.

But really, it could be anything: an AI-powered character that is dropped into a hot new game, perhaps powered by Nvidia’s ACE, might prompt an AI-powered gaming shift. Maybe it will be updates to Teams, Zoom, and Google Meet that will all use AI. Something else, perhaps.

AI PCs aren’t here yet, but they will be. We’ll be watching to see what happens, and you should too.