In the past week, we’ve seen several big AI releases: Gemini 2.0, OpenAI’s o1 (full, not preview), and Llama 3.3 70B, a model capable of matching GPT-4-class performance in some areas despite its smaller scale.

For businesses, what does any of this mean?

Do they test each (or any)? Run them through their paces? Give them a go at organization-specific tasks? Check them against what’s being used now? Is there a point person who’s digging into this stuff, or a means of communicating what’s being discovered and what’s changing in their field?

At PTP, we’re involved in AI—in-house development, consulting, and recruitment—so in our case, yes, there’s a lot of experimenting going on. But if AI’s not your core concern but rather a new and shifting tool that can do some nifty things but may not yet have clear application in your field, do you still give it attention?

Are you held back by concerns about AI security, identifying practical use cases, benchmarking effectively, cutting through excessive hype to assess value and costs, figuring out how to implement it, managing limited resources, or keeping up with its absurd pace of change?

And what about your competitors?

The McKinsey Global Institute found back in January that for collaboration and management tasks in operations, some 50% of typical tasks can be automated by AI. They believe the boost in potential automation will create an estimated $3.5 to $4 trillion, or around the GDP of the UK, of value in functions like engineering, procurement, supply chain, and customer operations—across industries.

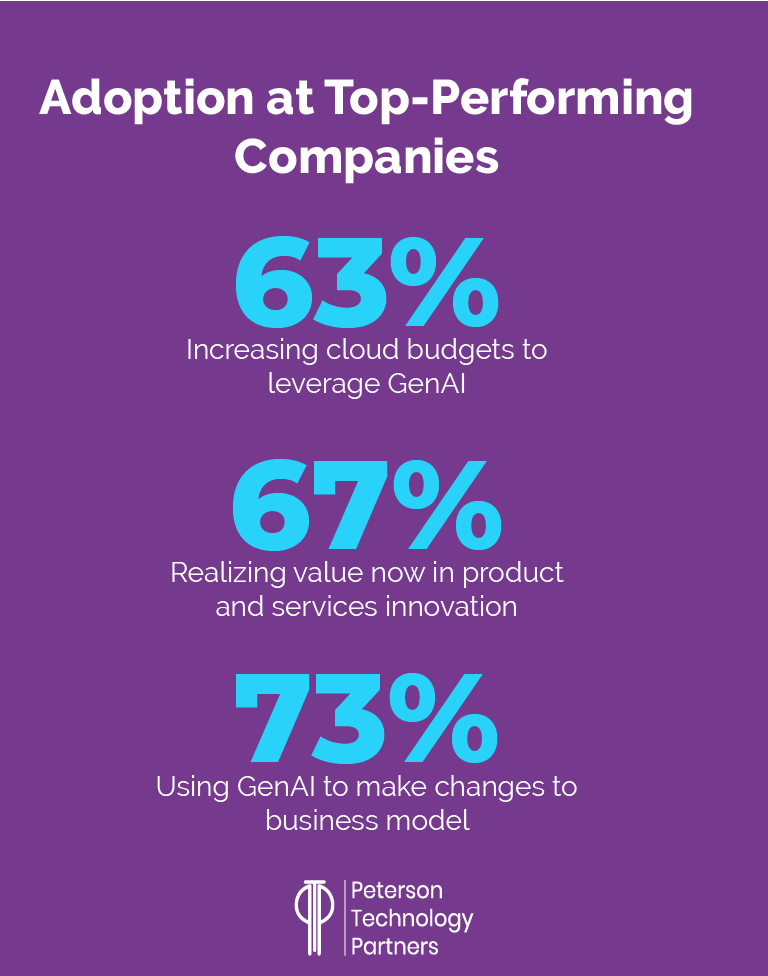

Clearly, the more room an organization has to research and experiment with AI, the more value it will take from it, and the more quickly, making this a potentially rich-get-richer innovation, at least at scale and in these early days.

Still, it comes with the promise of democratizing expertise, helping the least skilled most, and putting practical automation more in reach than ever before.

Scholars estimate that even if no new AI products came out from here, we’d still be processing what we’ve got for another five years or more, both to understand and to bring value from the incredible potential. In this way, it’s fair to say that in most places, it’s still in a research and development phase.

Today’s edition of The PTP Report takes on practical AI implementation challenges as we move into 2025, with a look at the current problems, how organizations can prepare, and some AI automation examples.

Primary Challenges Now

For all the gaudy promises AI brings, it’s still largely being rolled out by tech companies, and while it’s stunning at composition and ideation in the right contexts, often the use cases and realistic integrations remain unclear.

Practically speaking, we hear these a lot:

- What are our realistic use cases?

- How do we customize?

- How do we secure AI systems? Is it a risk to trust and safety?

1. Identifying Relevant Use Cases

There’s Microsoft Copilot, and there’s Salesforce’s Agentforce. Google has AI Overviews, Apple has Apple Intelligence, Adobe’s integrating it, and everywhere we see the term popping up. But aside from compositional autocomplete, how does an organization begin to find valuable AI automation use cases?

As with all digital transformation, as Columbia Business School Professor Rita McGrath recommends on the HBR IdeaCast podcast, it’s best to break it down:

“Instead of launching it like a great big bang and running the risk of a huge failure, you take it more step by step… So it’s building up digital capability but in a very step-by-step kind of way. And that allows the organization to much more readily absorb the change.”

So where does one begin?

The Wharton School’s Ethan Mollick, in his Substack One Useful Thing, lays out some 15 areas where he sees GenAI giving real value right now, starting with work where you’re an expert and can quickly assess the quality of others’ work.

In this way, as in others (such as prompting), it can be helpful to think of AI more as a co-worker or an absurdly educated intern than as a traditional software utility or hardware upgrade.

Off-the-shelf solutions excel at summarizing large amounts of info (provided you have error tolerance or sufficient checking), translation-based work (such as going between formats or working with forms), companion/assistance work (where you get the big picture but can use help with the details), situations where you will select the best from numerous options (à la ‘blank page’ breakers), and research assistance (such as in coding and legal research). They are also useful for getting a second opinion or varied review on something, extending or spreading out expertise, simulating perspectives, and completing ritualized work.

This includes tasks like standardized reports, dialing or answering phones, scheduling, confirmations, and much repetitive office work.

The more sandboxed the trials, the better, and for ROI, target areas where quick wins (and fails) can be checked against measurable outcomes.

Customer service is often one such area of low-hanging fruit, where satisfaction in some fields is already low and response times can be long. In healthcare, areas like scheduling, confirmations, and fielding routine questions tie up enough time now that they impact the quality of care patients receive. In finance departments, automating tasks like invoice-matching can save hours of manual labor where there’s already a second check in the case of failures.

Areas of rote repetition, or where tasks can be documented in extremely stable algorithms, prove excellent starting places for safe tests. Once the kinks are worked out and the workflow is scaled, businesses are realizing enormous savings.

On the other side, AI remains dangerous where high accuracy is essential, where human expertise is expected and valued, where it would replace genuine learning, and where not understanding its behavior is too risky (such as when it gives self-serving answers or works to convince others of untruths, or in areas of limited perspective or potential bias).

2. Customizing AI-Driven Business Solutions

Public systems can’t be safely used with some proprietary data, but that doesn’t mean you have to train models entirely yourself—pre-trained systems can be customized. Legal firms train models with their own data and case history to interpret contracts and identify potential problems. Recruitment systems work in a similar fashion, working from resumes and requirements, and adjusted by need.

Increasingly, AI design patterns also look to separate layers, so that complex tasks can be broken up and handled by a variety of different models, as best fits the need.

Building custom AI applications is already inexpensive in the right circumstances, as outlined in depth by Andrew Ng in DeepLearning.AI’s The Batch. The great expense that we’ve seen play out in the enormous infrastructure build-out makes semiconductors available at the data center layer, and that capacity extends to developers via the cloud, with the expensive initial training done at the layer of big foundation models. Orchestration layers increasingly provide software to coordinate calls to various LLMs and even other APIs, enabling a process that’s increasingly agent-based.
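To make the idea of an orchestration layer more concrete, here is a minimal sketch in Python of routing different task types to different models. Everything in it is an assumption for illustration: the `Orchestrator` class, the task-type names, and the two stub functions standing in for real model API calls are hypothetical, and a production system would add error handling, logging, and cost controls.

```python
from typing import Callable, Dict

ModelFn = Callable[[str], str]


# Hypothetical stand-ins for calls to real model APIs.
def small_fast_model(prompt: str) -> str:
    return f"[small] {prompt}"


def large_reasoning_model(prompt: str) -> str:
    return f"[large] {prompt}"


class Orchestrator:
    """Routes each task type to whichever model is registered for it."""

    def __init__(self, default: ModelFn) -> None:
        self._default = default
        self._routes: Dict[str, ModelFn] = {}

    def register(self, task_type: str, model: ModelFn) -> None:
        self._routes[task_type] = model

    def run(self, task_type: str, prompt: str) -> str:
        # Fall back to the default model for unregistered task types.
        model = self._routes.get(task_type, self._default)
        return model(prompt)


orchestrator = Orchestrator(default=small_fast_model)
orchestrator.register("contract_review", large_reasoning_model)

summary = orchestrator.run("summarize", "Summarize this meeting transcript")
review = orchestrator.run("contract_review", "Flag risky clauses in this contract")
print(summary)
print(review)
```

In this sketch, routine work goes to the cheaper default model, while the one task type registered as needing more capability goes to the larger one. Swapping a model then means changing one registration rather than every caller.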

Custom AI applications can be built affordably on top of this, making it easier than ever to experiment and generate initial cost estimates.

And one of the biggest of the AI customization challenges is learning what is and isn’t possible for your needs from what’s available now.

The easiest way to answer more general questions like this is to have a point person, who either checks out new solutions or delegates testing and processes results. And with such subjectivity in measures, there’s no substitute for creating your own benchmarks.

By developing a set of tasks or challenges specific to the work you need done, you can run each new AI solution that arrives through them for comparison, in a process similar to what many existing benchmarks do now.
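As a rough illustration, a homegrown benchmark can be as simple as a list of tasks, each with a pass/fail check, run against every candidate. The Python sketch below assumes hypothetical stub functions (`candidate_a`, `candidate_b`) in place of real AI solutions, and the tasks and checks are invented examples, not a recommended test set.

```python
from typing import Callable, Dict, List, NamedTuple


class Task(NamedTuple):
    name: str
    prompt: str
    passes: Callable[[str], bool]  # organization-specific check on the output


# Hypothetical stubs standing in for real AI solutions under evaluation.
def candidate_a(prompt: str) -> str:
    return "Total due: 1,250 USD, payable by 2025-01-31"


def candidate_b(prompt: str) -> str:
    return "The invoice is for some amount."


# Invented example tasks; a real set would come from your own work.
TASKS: List[Task] = [
    Task("extract_amount", "What is the total due on the attached invoice?",
         lambda out: "1,250" in out),
    Task("extract_date", "When is the attached invoice payable?",
         lambda out: "2025-01-31" in out),
]


def run_benchmark(models: Dict[str, Callable[[str], str]],
                  tasks: List[Task]) -> Dict[str, float]:
    """Return the fraction of tasks each model passes."""
    scores: Dict[str, float] = {}
    for model_name, model in models.items():
        passed = sum(1 for task in tasks if task.passes(model(task.prompt)))
        scores[model_name] = passed / len(tasks)
    return scores


scores = run_benchmark(
    {"candidate_a": candidate_a, "candidate_b": candidate_b}, TASKS
)
print(scores)
```

Because the task list stays fixed, each newly released solution can be added as one more entry and compared against earlier results on the same terms.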

Even without space or budget for custom development, this can be easily done by a workforce using GenAI solutions by assigning departments to create, check, and share prompt templates specific to their tasks and uses.
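One lightweight way to share prompt templates is a small registry keyed by department and task, sketched below in Python using the standard library’s `string.Template`. The department names, task names, and template wording are hypothetical examples, and `render_prompt` is an illustrative helper rather than part of any particular product.

```python
from string import Template

# Hypothetical shared registry: (department, task) -> prompt template.
PROMPT_TEMPLATES = {
    ("recruiting", "screen_resume"): Template(
        "Compare the resume below against these requirements: $requirements\n"
        "List matches and gaps.\n\nResume:\n$resume"
    ),
    ("support", "draft_reply"): Template(
        "Draft a polite reply to this customer message in a $tone tone:\n$message"
    ),
}


def render_prompt(department: str, task: str, **fields: str) -> str:
    """Fill in a department's template; raises KeyError if a field is missing."""
    template = PROMPT_TEMPLATES[(department, task)]
    return template.substitute(**fields)


prompt = render_prompt(
    "recruiting",
    "screen_resume",
    requirements="5 years of Python, healthcare experience",
    resume="Senior developer with 7 years of Python in hospital systems.",
)
print(prompt)
```

Keeping templates in one checked and shared place means a department’s best-performing prompt is reused consistently, and the same templates can double as inputs when comparing new AI solutions.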

In addition to providing necessary information for customization decisions, it has the added bonus of encouraging greater communication, learning, and transparency on companywide AI use.

Here again, the benefits of empowering so-called citizen developers in automation among your workforce are significant, if you haven’t done so already.

[PTP founder and CEO Nick Shah has written recently about nurturing in-house innovation and some of the leadership challenges of AI uncertainty in his newsletters. Check them out for more detail on these topics.]

Your personalized checks can range from full solutions or partners that establish benchmarks for you, to open-source testing options you can run yourself (such as promptfoo), to a simple bank of prompts with results compared along the metrics that make the most sense.

[Check out Blind Selection: The Struggle to Objectively Measure AI from The PTP Report for a look at existing measures and sites that demonstrate how the foundational models compare.]

3. Ensuring Security, Trust and Safety in AI

One immediate concern for most organizations is: How can I be sure this is secure?

We wrote a recent PTP Report on some of the data challenges with AI and potential solutions, so take a look there for a focus on the data component. In general, having clean, usable, quality data is essential to getting good results from AI.

Security is an entire report in itself, but it is best achieved with clear policy, training, and technical protections all working in concert.

Examples include:

All AI systems demonstrate bias, and likely always will, as they draw from source data or the perspectives of their creators. This is best addressed by being transparent about use, monitoring for bias, using the most diverse datasets possible, and taking advantage of multiple LLMs, such as through the layers discussed above.

Many organizations that have compliance needs begin with AI readiness assessments (which need use cases), looking at their starting data quality, current skill needs, hallucination and bias risks, and potential data privacy issues such as those arising under the GDPR. Some existing US state regulations require bias evaluations, and here it can be critical to identify, as early as possible, where people are going to be needed at key steps in the process.

Automation Examples in Action

There’s using GenAI to improve ideation, content creation, writing, and research.

But then there’s more self-sufficient AI automation. Numerous companies already have AI automation systems at work, such as in banking, where Notion and Kasisto process internal documentation to provide ready knowledge for employees, Objectway provides back-office support to handle tasks like data entry, and Zocks builds assistant agents with notetaking for your CRM. Eigen Technologies builds AI-automated Intelligent Document Processing (IDP) that can read, categorize, and input piles of received paper directly into internal systems.

Amazon is among those automating heavily, from their cloud systems and data centers, to robotics, to using machine learning to optimize inventory and logistics, and AI insights to transform the customer experience on the front end.

In healthcare, companies like PathAI and DaVita are improving diagnostic accuracy with measurable results, while companies like BenchSci speed research. HCA Healthcare has implemented shift-change data sharing to solve a major pain point and ensure information isn’t dropped and critical time isn’t lost handing off.

PTP, like these companies, is putting practical automation into practice. We’ve seen our systems directly improve the quality of healthcare for clients by, for example, automating repetitive services like scheduling and rescheduling, outbound confirmation, and customer service.

From the accessibility of talent to the efficiency of reporting to the transformation of call handling and campaign scaling, AI automation is already practical in areas where there are fault tolerance, stable algorithms for behavior, and extensive training.

Our Pete & Gabi system, for example, doesn’t replace human workers, but it takes over mundane tasks like dialing, contacting leads, pre-screening candidates, or fielding incoming inquiries.

This has proven to be both a safe and highly effective use case for clients, with the capacity to fully customize not only source scripts, but also the voices themselves, with data-driven personalization, empathetic call flow and endings, and a capacity to escalate or hand off to live staff when needed. All of this is reliable and effective, without putting their data or name at risk.

As a first step, this kind of AI-powered business automation not only saves as much as 94% of the cost of traditional approaches (calling and fielding calls in this case), but also greatly expands an organization’s reach and its capacity to make or field contacts, and can even improve the quality of the message and, ultimately, the service being provided.

Conclusion

What are the best AI adoption strategies and what are realistic ROI expectations from AI solutions? It’s a work in progress, with this data still coming in and numbers getting thrown around that vary wildly.

But one thing that’s immediately obvious is that the potential for extensive savings and service improvements is real and significant.

Many companies are no doubt lying in wait for the early adopters to blaze the trail and work out flaws, and for the failures and limitations to become clear, as the hype, like smoke, clears.

But things are moving very fast. 2025 is supposed to be the year of AI agents, and most of the AI luminaries don’t seem to be budging on their predictions on AGI, unless it’s to move them up sooner than expected.

Still, as new products are released and billions on billions are spent, as the news fills with reports of wild power consumption, proliferating data centers, prize-winning scientific breakthroughs, and dangerous misinformation capabilities, the question remains: Are most companies really moving yet on AI?

Are they considering use cases, setting benchmarks, cleaning up and preparing their data, readying their infrastructure, or even using available public tools to understand potential benefits?

A better question may be, despite all the concerns, and with so much at stake, can they afford not to?

References

Google (GOOGL) Reveals New AI Model in Race with OpenAI, Nasdaq

Generative AI in operations: Capturing the value, McKinsey & Company Podcast

Agentic Design Patterns Part 2, Reflection, The Batch

The Falling Cost of Building AI Applications, The Batch

Are You Ready for AI Agents?, VentureBeat

15 Times to use AI, and 5 Not to, One Useful Thing Substack

Digital Transformation, One Discovery at a Time, HBR IdeaCast Episode 738

9 ways AI can make your banking job easier, Fast Company