Artificial Intelligence & Machine Learning , Next-Generation Technologies & Secure Development

What Enterprises Need to Know About Agentic AI Risks

Mitigating Cybersecurity, Privacy Risks for New Class of Autonomous Agents

Many organizations are looking to artificial intelligence agents to autonomously perform tasks that surpass traditional automation. Tech firms are rolling out agentic AI tools that can handle customer-facing interactions, IT operations and a variety of other processes, but experts are cautioning security teams to watch for cyber and privacy risks.

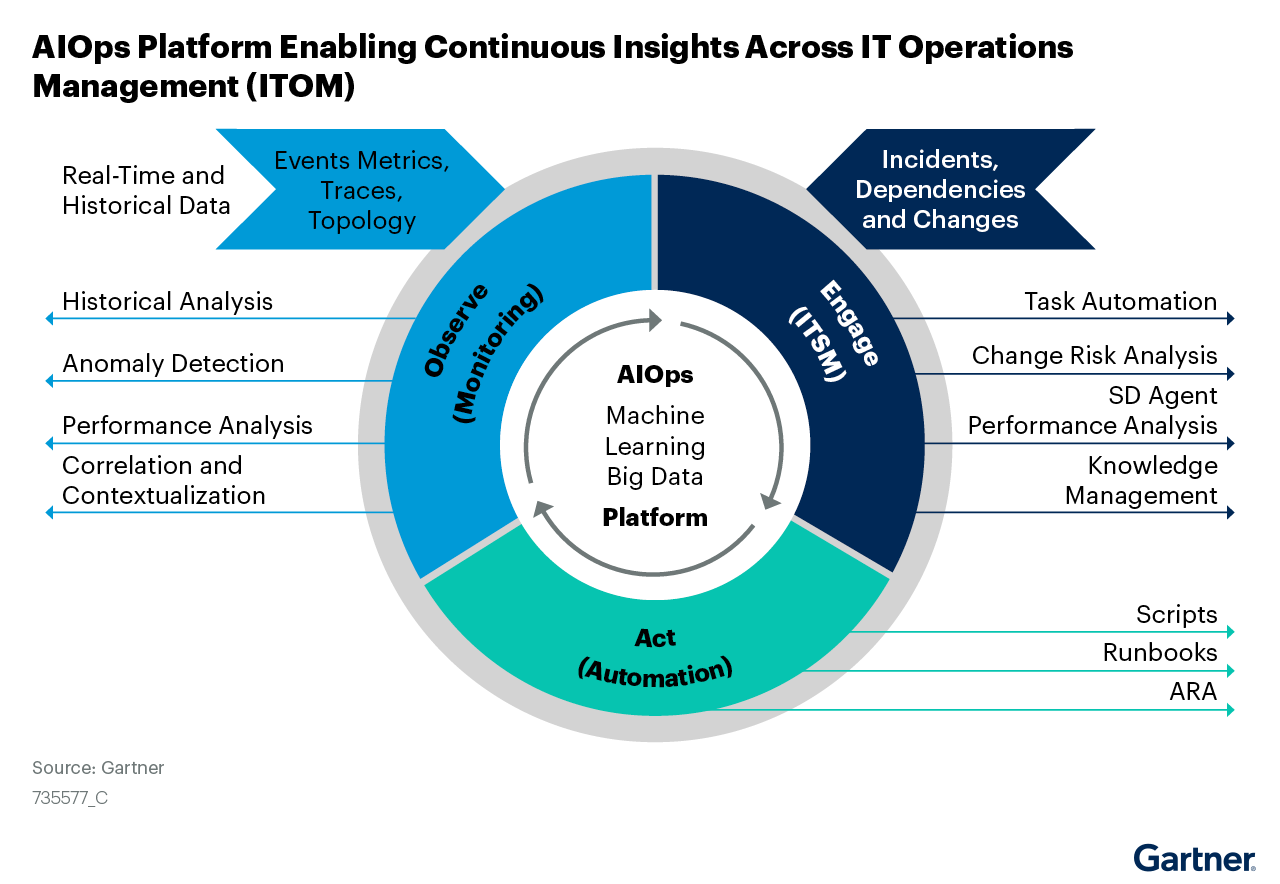

AI agents analyze large datasets, identify patterns and generate actionable insights, said Avivah Litan, vice president and distinguished analyst at Gartner, adding that they can manage customer service inquiries, escalate issues and even offer personalized recommendations. In IT operations, AI agents can detect system anomalies and initiate corrective measures. By automating these tasks, enterprises can potentially increase efficiency and redirect human resources toward strategic goals.

Agents, the latest hype in the AI universe, connect to other systems and receive functionality to make decisions. Companies including Google, Nvidia and Anthropic have announced new tools in recent months, with OpenAI CEO Sam Altman hinting that AI agents are expected to enter workforce this year.

But the growing availability of AI agents comes with a downside: heightened security risks. In contrast to traditional AI applications that are already within controlled parameters, AI agents interact with multiple systems and external data sources. This can lead to a broader attack surface, unauthorized access and data leakage. Weak or poor systems integration has previously resulted in the leakage of sensitive information by AI agents, while coding flaws or malicious interference can trick an AI agent into taking unintended to disrupt business or lead to financial loss, Litan said.

AI agents are more useful than traditional automation, but the advantage comes at a cost.

Traditional automation techniques are based on predefined rules that govern the behavior of a system, while AI agents run on training data and instructions.

David Brauchler, CTO of cybersecurity firm NCC Group, explains: When condition X is met, then perform action Y - that's traditional automation. AI is inherently qualitative, so it represents a statistical relationship between its input and output. Classic automation relies on the effectiveness of its rules for correctness, while large language model-based automation relies on the effectiveness of its training process and prompt. The latter can increase the probability of correct behavior, but does not offer any security or quality guarantees. So, the effectiveness of AI agents depends on a tradeoff between low-flexibility, high-assurance workloads in classic automation to high-flexibility, low-assurance workloads in agentic automation.

AI agents also often serve as gateways to sensitive data and critical systems, and thus their autonomous capabilities pose unique risks compared to just running any application that uses AI, he added. For example, a compromised logistics agent could propagate malicious commands across a supply chain, causing widespread disruption.

Brauchler told Information Security Media Group the dynamic and interconnected nature of AI agents makes real-time threat detection challenging, often outpacing traditional security measures. AI application developers typically have the misconception that AI vulnerabilities are self-contained in models and once those flaws are addressed, the technology is safe to incorporate into a broader platform.

"But the most severe and impactful vulnerabilities actually come when connecting these technologies to other systems. The closer an application creeps toward providing a model with agentic capabilities, the more security vulnerabilities it introduces," Brauchler said.

To counter this, he advises a comprehensive threat modeling plan to assess the risks of certain types of deployments. Architectural controls such as least-privilege access and dynamic capability shifting can also safeguard systems. "These separate trusted and untrusted zones in a system can significantly reduce the risk of accidental actions," he said. Doing so enables detection and response to anomalies by monitoring agent activity and maintaining immutable audit trails, he said.

Developing safe AI agents also depends on collaboration among developers, vendors and users. "It's shared responsibility," Litan said. "If security issues are not holistically addressed, it can bring about data breaches, financial loss and damage to reputation."

Businesses have attempted for years to shift risk away from themselves and onto AI developers by asking for guardrails that minimize the chance of LLMs violating their intended directives, Brauchler said. But guardrails are not hard security controls, and are pacesetters for risk, not mitigations. "In reality, there are no guardrails that can prevent threat actor-controlled inputs from manipulating an AI model's behavior and executing malicious actions," he said. "Organizations that implement AI without architecting their applications to account for the uncertainty of AI behavior are asking for trouble."

Experts recommend revisiting the security architectures of old to counter the unique challenge that AI agents present.

Brauchler explains, practically, what that architecture could look like: Security issues plaguing technology for 30+ years can be traced to the principle of data-code separation. But for LLMs, data is code. So rather than a patch-first approach, a data trust approach should be considered. That means in the case of exposing an LLM to untrusted data, its capabilities or the function calls should be lowered down to the least trusted data it receives.

For instance, an IT admin agent should not be able to access administrative functionality so long as untrusted data is within its context window when it is exposed to tickets from low-privilege users. Untrusted agents can still be useful to applications by parsing the data, creating a plan of action and passing the data to trusted, classic systems to manage the data. By controlling the exposure of data to only trusted models, application architects can group LLMs into trusted zones and untrusted zones, he said.

For example, if an IT admin asks an agent to make an urgent ticket list. The trusted agent reads and parses the request, determines that interaction with untrusted data is expected and dispatches the "parse tickets" task to the untrusted agent. The untrusted agent parses the tickets, determines whether it is urgent, and then passes the backend code a yes or no for each ticket. The code then returns the yes/no list to either the trusted agent or the administrator directly. And this architecture provides a security guarantee in that malicious tickets can, at worst, mark themselves urgent, and have no capacity to compromise the administrator.

To deploy AI agents safely, experts advise following a structured approach with security assessments and AI-specific threat models, such as Models-As-Threat-Actors. Continuous monitoring and anomaly detection are important to preempt the unauthorized actions of AI agents, while governance policies with data access restrictions and clear incident response protocols help boost security.

"Agentic AI requires completely new architectural considerations, and developers who are not actively thinking about their environments in terms of trust, data and dynamic capability shifting are likely to inadvertently introduce security vulnerabilities," Brauchler said.