A group of researchers noticed something strange in certain scientific papers involving small rodents (like rats or mice): the number of animals reported at the start of an experiment often did not match the number of animals at the end. This can have a significant impact on experimental results, and yet it turns out to be surprisingly common.

A new paper in PLOS Biology takes a look at the efforts of a team of scientists, led by Ulrich Dirnagl at Charité Universitätsmedizin in Berlin, Germany, to ferret out the many rodents that go missing from lab studies—at least on paper.

It’s called “attrition.” An animal gets sick and dies due to causes unrelated to the study, or it behaves in some way that makes it an impossible subject. The problem comes when there is too much attrition, biased attrition, or unreported attrition—and through their efforts the German team has come to believe that many such lab studies are plagued by all three.

The group ran simulations of studies conducted using groups of eight treated animals and eight untreated control animals—roughly what someone would do for a preliminary study. Then they simulated the errors that a study might make due to random attrition and biased attrition. Biased attrition isn’t necessarily deliberate cheating or lying. Honest researchers who want, or expect, to see a result may write off side effects of a drug as behavioral problems, or significant deaths as random.

The researchers found that random attrition does not boost the rate of false positives, but in some cases it can produce a false negative. That’s because these types of studies start out small. Throw out animals and a study’s statistical power (that is, its ability to detect an effect) diminishes rapidly.

In the most severe cases, biased attrition increased the positive detection rate from 37 percent to 80 percent. In other words, it pays to throw out “outlier” data that doesn’t show researchers what they want to see.
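To get a feel for why the two kinds of attrition behave so differently, here is a minimal simulation sketch. It is not the researchers' actual code or model. It assumes normally distributed outcomes with a standard deviation of 1, a plain pooled-variance t-test at the 5 percent level, and a crude form of bias in which the worst-scoring treated animals are simply discarded. The function names (`is_significant`, `detection_rate`) and the parameters `effect` and `n_drop` are made up for illustration, and the numbers it prints should not be expected to match the paper's.

```python
import random
import statistics

# Two-sided 5% critical values of Student's t, keyed by degrees of freedom.
# Covers the group sizes used below (at most 8 animals per group).
T_CRIT = {4: 2.776, 5: 2.571, 6: 2.447, 7: 2.365, 8: 2.306, 9: 2.262,
          10: 2.228, 11: 2.201, 12: 2.179, 13: 2.160, 14: 2.145}


def is_significant(treated, control):
    """Pooled-variance two-sample t-test at the two-sided 5% level."""
    n1, n2 = len(treated), len(control)
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * statistics.variance(treated)
                  + (n2 - 1) * statistics.variance(control)) / df
    se = (pooled_var * (1 / n1 + 1 / n2)) ** 0.5
    t = (statistics.mean(treated) - statistics.mean(control)) / se
    return abs(t) > T_CRIT[df]


def detection_rate(effect, attrition="none", n_drop=0, n_per_group=8,
                   n_sims=2000, seed=0):
    """Fraction of simulated studies that report a significant difference.

    effect    -- true treatment effect in standard deviations (assumed)
    attrition -- "none", "random", or "biased" (hypothetical labels)
    n_drop    -- animals removed per affected group
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_sims):
        treated = [rng.gauss(effect, 1.0) for _ in range(n_per_group)]
        control = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
        if attrition == "random":
            # Animals lost for reasons unrelated to outcome, in both groups.
            treated = rng.sample(treated, n_per_group - n_drop)
            control = rng.sample(control, n_per_group - n_drop)
        elif attrition == "biased":
            # The worst-scoring treated animals are written off as "outliers".
            treated = sorted(treated)[n_drop:]
        hits += is_significant(treated, control)
    return hits / n_sims


if __name__ == "__main__":
    # A real effect of one standard deviation: random losses reduce power.
    print("real effect, no attrition:    ", detection_rate(1.0))
    print("real effect, random attrition:", detection_rate(1.0, "random", 3))
    # No real effect at all: biased losses manufacture "positive" results.
    print("no effect, no attrition:      ", detection_rate(0.0))
    print("no effect, biased attrition:  ", detection_rate(0.0, "biased", 2))
```

Under these assumptions, dropping animals at random lowers the share of studies that detect a real effect, while dropping only the inconvenient animals raises the share of studies that "find" an effect that isn't there.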

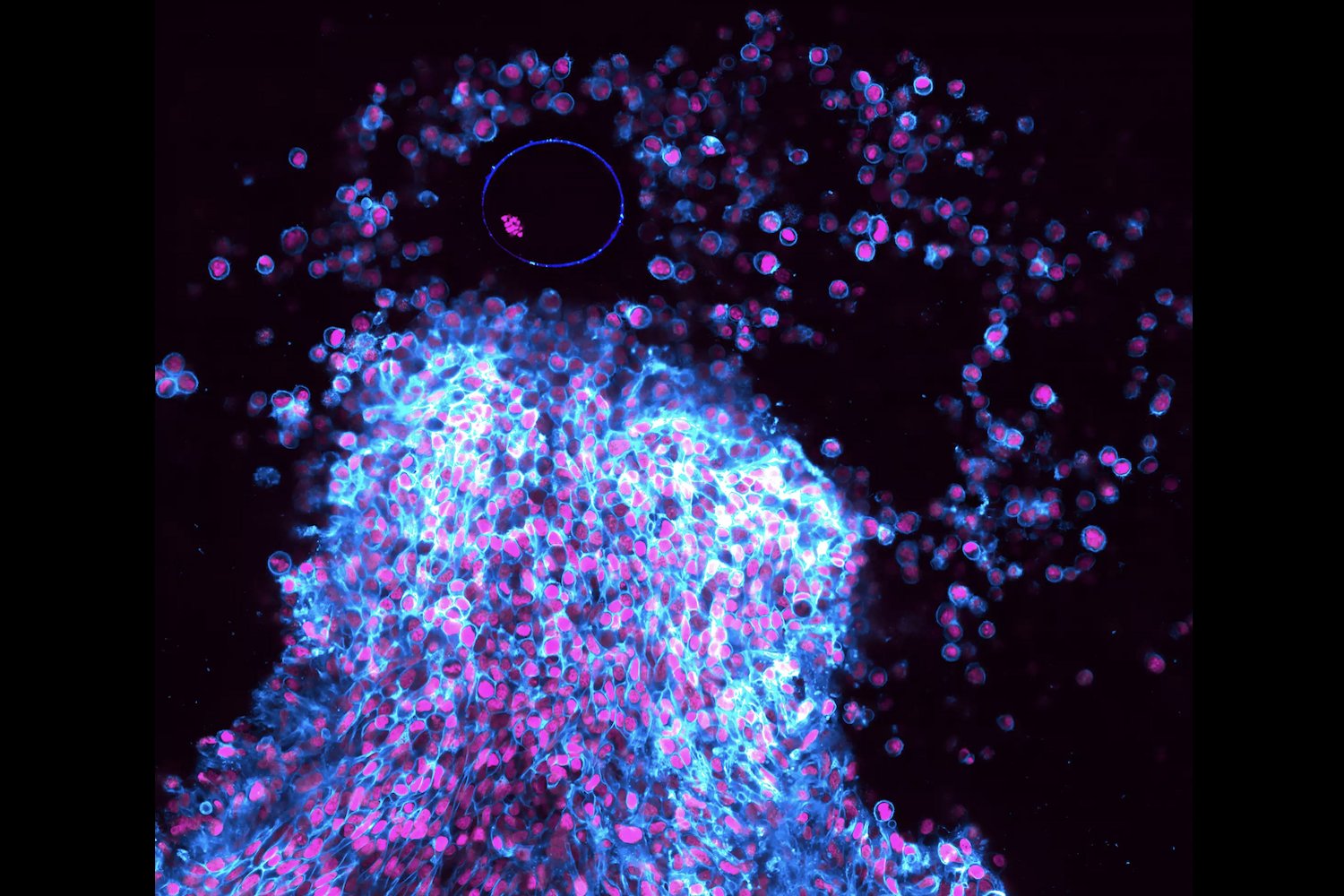

So how likely is it that we’re being guided by biased attrition? We have no idea. The researchers looked at a group of studies done on cancer and stroke research, sorting them into categories. More than half of the papers ended up in the “unclear” category, meaning that it wasn’t clear if the number of animals described in the “method” section matched the number in the “results” section.

Next most common was the “matched” category, in which the number of animals clearly matched in both sections. Last came the “attrition” category, in which it was clear that some animals were eliminated. These had to be further subdivided into “explained” and “unexplained,” because only some papers explained why animals had been eliminated.

Finally, the researchers looked at whether the treated group of animals ended up being better off than the untreated group. In all of the categories it did. The “matched” category showed the biggest effect for both cancer and stroke. The “attrition” category came second for cancer and third for stroke, while “unclear” came second for stroke and third for cancer.

The researchers aren’t sure about the “matched” category. They can only go by what’s in the papers, and can’t be sure the animals in the “matched” studies weren’t winnowed down before the experiment started.

Whatever the case, the fact that so many of the experiments fell into the unclear category is a problem. Not only does it mean that the results may be inaccurate, it’s not even possible to really check them.

[Experimental Research on Cancer and Stroke]

Images via Wikimedia