There are people who argue, persuasively, that Hollywood films are worse than they used to be. Or that novels have turned inward, away from the form-breaking gestures of decades past. In fact, almost anything can be slotted into a narrative of decline—the planet, most obviously, but also (per our former president) toilets and refrigerators. One of the few arenas immune to this criticism is science: I doubt there are very many people nostalgic for the days before the theory of relativity or the invention of penicillin. Over the centuries, science has just kept racking up the wins. But which of these wins—limiting ourselves to the last half-century—mattered most? What is the most important scientific development of the last 50 years? For this week’s Giz Asks, we reached out to a number of experts to find out.

Felicitas Heßelmann

Research Assistant, Social Sciences, Humboldt University of Berlin

A bit more than 50 years ago, but I would say the most influential were the related developments of the Journal Impact Factor and the Science Citation Index (precursor of today’s Web of Science) by Eugene Garfield and Irving H. Sher between 1955 and 1961.

These developments laid the groundwork for current regimes of governance and evaluation in academia. Their influence on the structure of science as we know it can hardly be overestimated: Today, it is difficult to imagine any funding, hiring, or publication decision that does not draw in some way either directly on the JIF or data from the Web of Science, or at least on some other form of quantitative assessment and/or large-scale literature database. Additionally, the way we engage with academic literature and hence how we learn about and build on research results has also fundamentally been shaped by those databases.

As such, they influence which other scientific developments were made possible in the last 50 years. Some groundbreaking discoveries might have only been possible under this regime of evaluation of the JIF and the SCI, because those projects might not have been funded under a different regime—but also, it’s possible that we missed out on some amazing developments because they did not (promise to) perform well in terms of quantitative assessment and were discarded early on. Current debates also highlight the perverse and negative effects of quantitative evaluation regimes that place such a premium on publications: goal displacement, gaming of metrics, and increased pressure to publish for early career researchers, to name just a few. So while those two developments are extremely influential, they are neither the only nor necessarily the best possible option for academic governance.
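For readers who have not seen how the JIF is computed, here is a minimal sketch of the standard two-year calculation: citations received in a given year by items a journal published in the two preceding years, divided by the number of citable items it published in those two years. The function name and the example journal figures are hypothetical, chosen only for illustration.

```python
def journal_impact_factor(citations_this_year: int, citable_items_prev_two_years: int) -> float:
    """Two-year impact factor for a given year.

    citations_this_year: citations received this year by items the journal
        published in the two preceding years.
    citable_items_prev_two_years: number of citable items the journal
        published in those two preceding years.
    """
    if citable_items_prev_two_years <= 0:
        raise ValueError("the journal must have published at least one citable item")
    return citations_this_year / citable_items_prev_two_years


# Hypothetical journal: 200 citable items over two years, cited 500 times this year.
example_jif = journal_impact_factor(500, 200)
print(example_jif)
```

Because the whole metric reduces to one ratio, anything that raises the numerator or shrinks the denominator moves the score, which is one reason the gaming of metrics mentioned above is possible.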

Robert N. Proctor

Professor, History of Science, Stanford University, whose research focuses on 20th century science, technology, and medicine

That would surely be the discovery and proof of global warming. Of course, pieces of that puzzle were figured out more than a century ago: John Tyndall in the 1850s, for example, showed that certain gases trap rays from the sun, keeping our atmosphere in the toasty zone. Svante Arrhenius in 1896 then showed that a hypothetical doubling of CO2, one of the main greenhouse gases, would cause a predictable amount of warming—which for him, in Sweden, was a good thing.

It wasn’t until the late 1950s, however, that we had good measurements of the rate at which carbon was entering our air. A chemist by the name of Charles Keeling set up a monitoring station atop the Mauna Loa volcano in Hawaii, and soon thereafter noticed a steady annual increase of atmospheric CO2. Keeling’s first measurements showed 315 parts per million and growing, at about 1.3 parts per million per year. Edward Teller, “father of the H-bomb,” in 1959 warned oil elites about a future of melting ice caps and Manhattan under water, and in 1979 the secret sect of scientists known as the Jasons confirmed the severity of the warming we could expect. A global scientific consensus on the reality of warming was achieved in 1990, when the Intergovernmental Panel on Climate Change produced its first report.

Today we live with atmospheric CO2 in excess of 420 parts per million, a number that is still surging every year. Ice core and sea sediment studies have shown that we now have more carbon in our air than at any time in the last 4 million years: the last time CO2 was this high, most of Florida was underwater and 80-foot sharks with 8-inch teeth roamed the oceans.
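The figures quoted above invite a quick back-of-the-envelope check. The sketch below extends Keeling's early numbers (315 parts per million, rising about 1.3 per year) in a straight line. The start year of 1958 and the comparison year of 2022 are assumptions added for illustration; they are not stated in the text, and the function names are hypothetical.

```python
FIRST_PPM = 315.0      # Keeling's first measurements, as quoted above
RATE_PPM_PER_YEAR = 1.3  # early growth rate, as quoted above
START_YEAR = 1958      # assumption: year of the first Mauna Loa readings


def linear_co2_ppm(year: int) -> float:
    """CO2 level if the early growth rate had simply stayed constant."""
    return FIRST_PPM + RATE_PPM_PER_YEAR * (year - START_YEAR)


def year_reaching(target_ppm: float) -> float:
    """Year in which the constant-rate line would hit a target level."""
    return START_YEAR + (target_ppm - FIRST_PPM) / RATE_PPM_PER_YEAR


print(round(linear_co2_ppm(2022), 1))  # constant-rate estimate for 2022
print(round(year_reaching(420.0), 1))  # when the line would reach 420 ppm
```

Under these assumptions the straight line stays below 400 parts per million in 2022 and does not reach 420 until the late 2030s. Since the air already holds more than 420, the yearly increase must have grown since those first measurements.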

Coincident with this proof of warming has been the recognition that the history of the earth is a history of upheaval. We’ve learned that every few million years Africa rams up against Europe at the Straits of Gibraltar, causing the Mediterranean to desiccate, which is why there are canyons under every river feeding that sea. We know that the bursting of great glacial lakes created the Scablands of eastern Washington State, but also the channel that now divides France from Great Britain. We know that the moon was formed when a Mars-sized planet crashed into the earth and that the dinosaurs were killed by an Everest-sized meteor that slammed into the Yucatan some 66 million years ago, pulverizing billions of tons of rock and strewing iridium all over the globe. All of these things have been only recently proven. Science-wise, we are living in an era of neo-geocatastrophism.

Two things are different about our current climate crisis, however.

First is the fact that humans are driving the disaster. The burning of fossil fuels is a crime against all life on earth, or at least those parts we care most about. Pine bark beetles now overwinter without freezing, giving rise to yellowed trees of death. Coral reefs dissolve, as the oceans acidify. Biodisasters will multiply as storms rip ever harder, and climate fires burn hotter and for longer. Organisms large and small will migrate to escape the heat, with unknown consequences. The paradox is that all these maladies are entirely preventable: we cannot predict the next gamma-ray burst or solar storm, but we certainly know enough to fix the current climate crisis.

The second novelty is the killer, however. For unlike death-dealing asteroids or gamma rays, there is a cabal of conniving corporations laboring to ensure the continued burning of fossil fuels. Compliant governments are co-conspirators in this crime against the planet—along with “think tanks” like the American Petroleum Institute and a dozen-odd other bill-to-shill “institutes.” This makes the climate crisis different from most previous catastrophes or epidemics. It is as if the malaria mosquito had lobbyists in Congress, or Covid had an army of attorneys. Welcome to the Anthropocene, the Pyrocene, the Age of Agnotology!

So forget the past fifty years: the discovery of this slow boil from oil could well become the most important scientific discovery in all of human history. What else even comes close?

Hunter Heyck

Professor and Chair, History of Science, The University of Oklahoma

I’d say the best candidate is the set of ideas and techniques associated with sequencing genes and mapping genomes.

As with most revolutionary developments in science, the genetic sequencing and mapping revolution wasn’t launched by a singular discovery; rather, a cluster of new ideas, tools, and techniques, all related to manipulating and mapping genetic material, emerged around the same time. These new ideas, tools, and techniques supported each other, enabling a cascade of continuing invention and discovery, laying the groundwork for feats such as the mapping of the human genome and the development of the CRISPR technique for genetic manipulation. Probably the most important of these foundational developments were those associated with recombinant DNA (which allow one to experiment with specific fragments of DNA), with PCR (the polymerase chain reaction, used to duplicate sections of DNA precisely, and in quantity), and with gene sequencing (used to determine the sequences of base pairs in a section of DNA, and thus to identify genes and locate them relative to one another). While each of these depended upon earlier ideas and techniques, they all took marked steps forward in the 1970s, laying the foundation for rapid growth in the ability to manipulate genetic material and to map genes within the larger genomes of individual organisms. The Human Genome Project, which officially ran from 1990 to 2003, invested enormous resources in this enterprise, spurring startling growth in the speed and accuracy of gene sequencing.

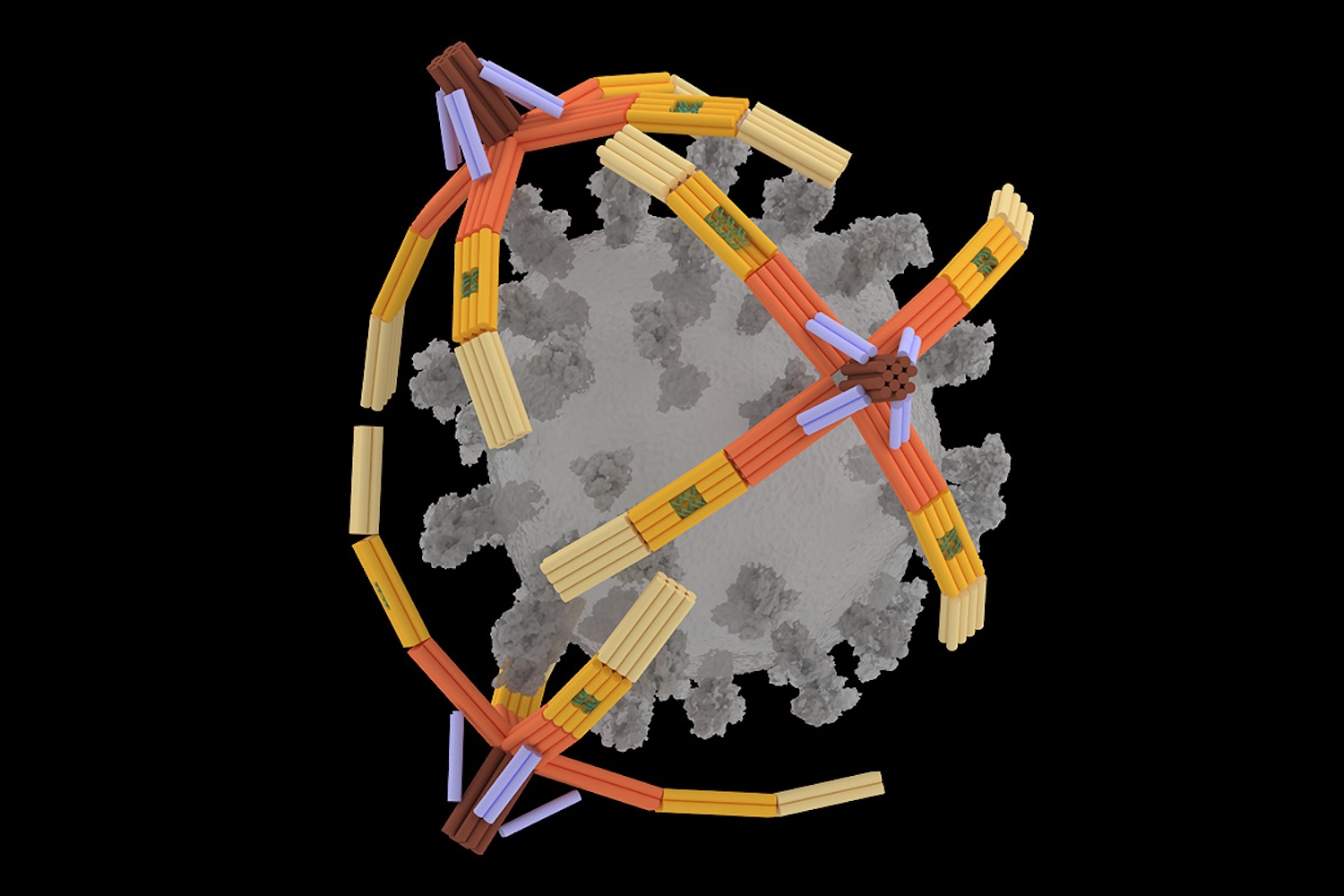

The ramifications of this cluster of developments, both intellectual and practical, have been enormous. On the practical side, the use of DNA evidence in criminal investigation (or in exonerating the wrongly convicted) is now routine, and the potential for precise, real-time genomic identification (and surveillance) is being realized at a startling pace. While gene therapies are still in their infancy, the potential they offer is tantalizing, and ‘genomic medicine’ is growing rapidly. Pharmaceutical companies now request DNA samples from individual experimental subjects in clinical trials in order to correlate drug efficacy with aspects of their genomes. And, perhaps most important of all, the public health aspects of gene sequencing and mapping are stunning: the genome of SARS-CoV-2, the coronavirus that causes Covid-19, was sequenced by the end of February 2020, within weeks of the realization that it could pose a serious public health threat, and whole-genome analysis of virus samples from around the world, over time, has enabled public health experts to map its spread and the emergence of variants in ways that would have been unthinkable even a decade ago. The unique aspects of the virus that make it so infectious were identified with startling speed, and work on an entirely new mode of vaccine development began, leading to the development, testing, and mass production of a new class of vaccines (mRNA vaccines) of remarkable efficacy in an unbelievably short time: less than a year from identification of the virus to approval and wide use. It is hard to overstate how amazing this novel form of vaccine development has been, and how large its potential is for future vaccines.

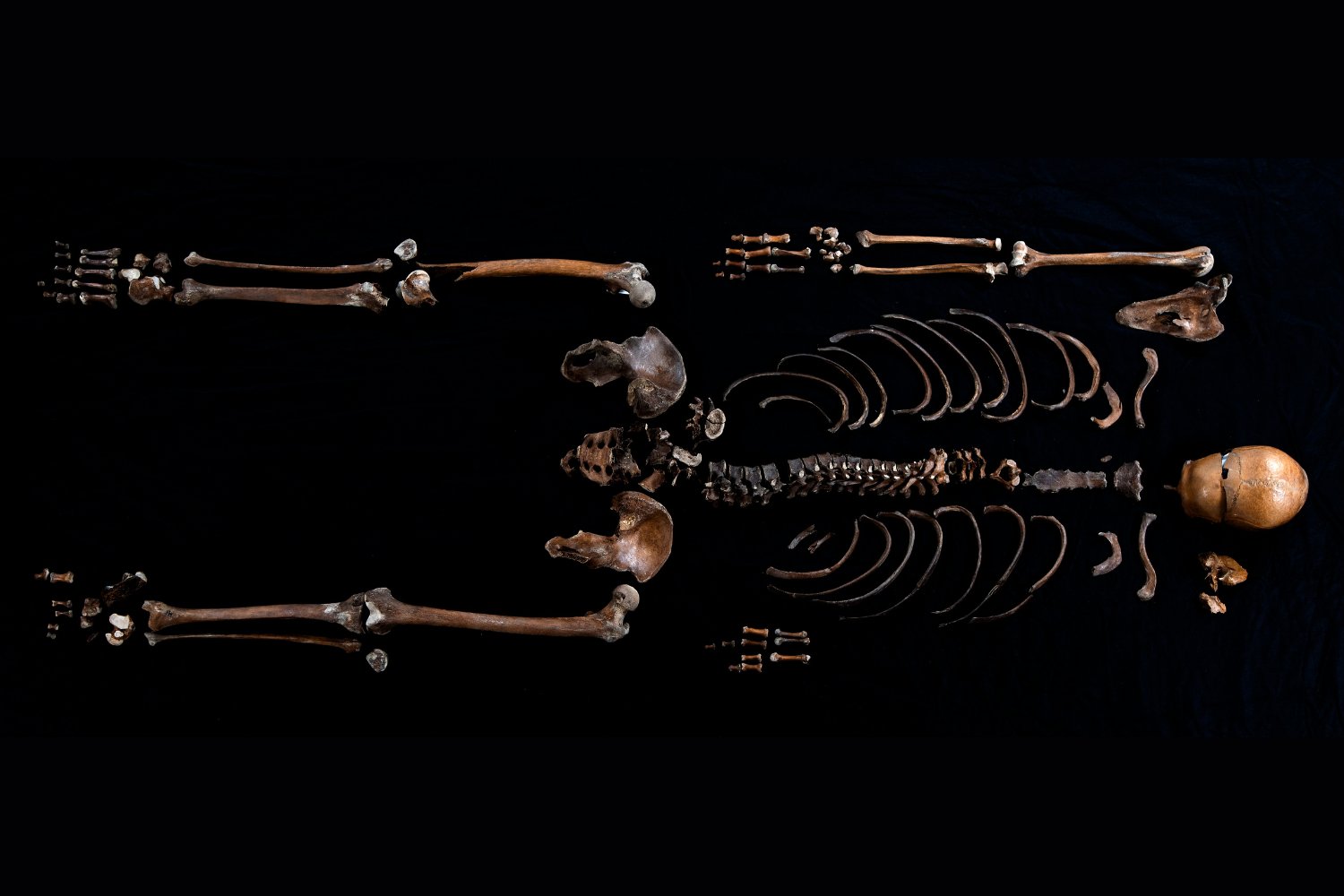

On the intellectual/cultural side, the collection of techniques for manipulating and mapping genetic material is challenging longstanding ideas about what is natural and about what makes us human. Organic, living things now can be plausibly described as technologies, and that’s an unsettling thing. Aspects of our individual biological identities that once were ‘givens’ are increasingly becoming choices, with implications we are just beginning to see. In addition, these same techniques are being deployed to reconstruct our understanding of evolutionary history, including our own evolution and dispersal across the globe, and perhaps nothing is more significant than changing how we understand ourselves and our history.

Jon Agar

Professor, Science and Technology Studies, University College London, who researches the history of modern science and technology

My answer would be PCR, the polymerase chain reaction. Invented by Kary Mullis at the Cetus Corporation in California in 1983 (and first published in 1985), it’s as important to modern genetics and molecular biology as the triode and the transistor are to modern electronics. Indeed it has the same role: it’s an amplifier. DNA can be multiplied. It’s a DNA photocopier. Without it, especially once automated, much modern genetics would be extremely time-consuming, laborious handcraft, insanely expensive, and many of its applications would not be feasible. It enables sequencing and genetic fingerprinting, and we have it to thank for COVID tests and vaccine development. Plus, you can turn it into a fantastic song by adapting the lyrics to Sleaford Mods’ “TCR.” Sing along now: P! C! R! Polymerase! Chain! Reaction!
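To put a number on the "amplifier" comparison, here is a minimal sketch of idealized PCR arithmetic, in which every thermal cycle doubles the target DNA. The function name and the `efficiency` parameter are illustrative assumptions; real reactions fall short of perfect doubling and eventually plateau, which this simple model ignores.

```python
def pcr_copies(initial_copies: int, cycles: int, efficiency: float = 1.0) -> float:
    """Estimated copy count after a number of PCR cycles.

    efficiency is the fraction of molecules duplicated per cycle:
    1.0 means perfect doubling, lower values model a less ideal reaction.
    """
    if cycles < 0 or not 0.0 <= efficiency <= 1.0:
        raise ValueError("cycles must be >= 0 and efficiency between 0 and 1")
    return initial_copies * (1.0 + efficiency) ** cycles


# A single starting molecule after 30 ideal cycles: 2**30, just over a billion copies.
print(int(pcr_copies(1, 30)))
```

Thirty doublings turn one molecule into roughly a billion, which is why a trace of viral genetic material on a swab can become a detectable signal.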